

🕓 February 15, 2026

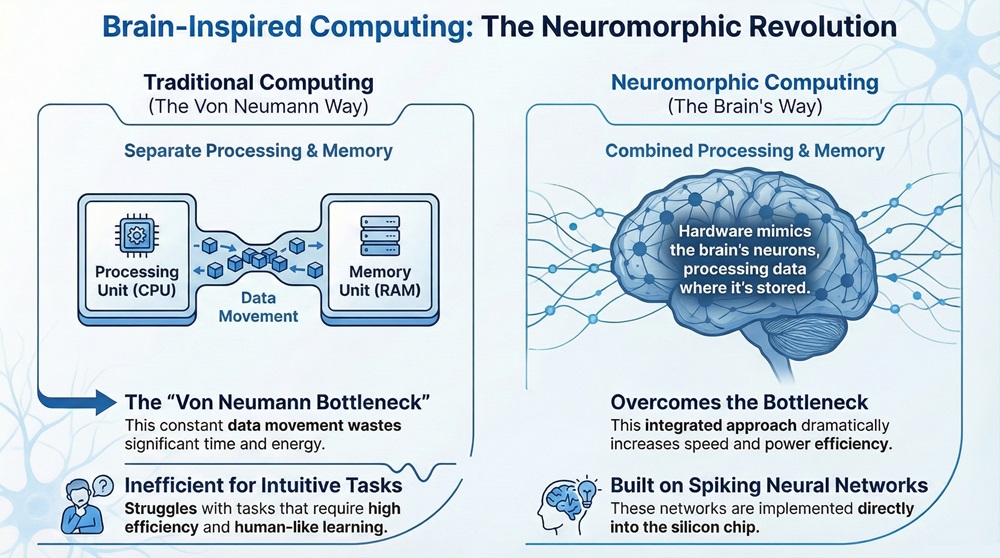

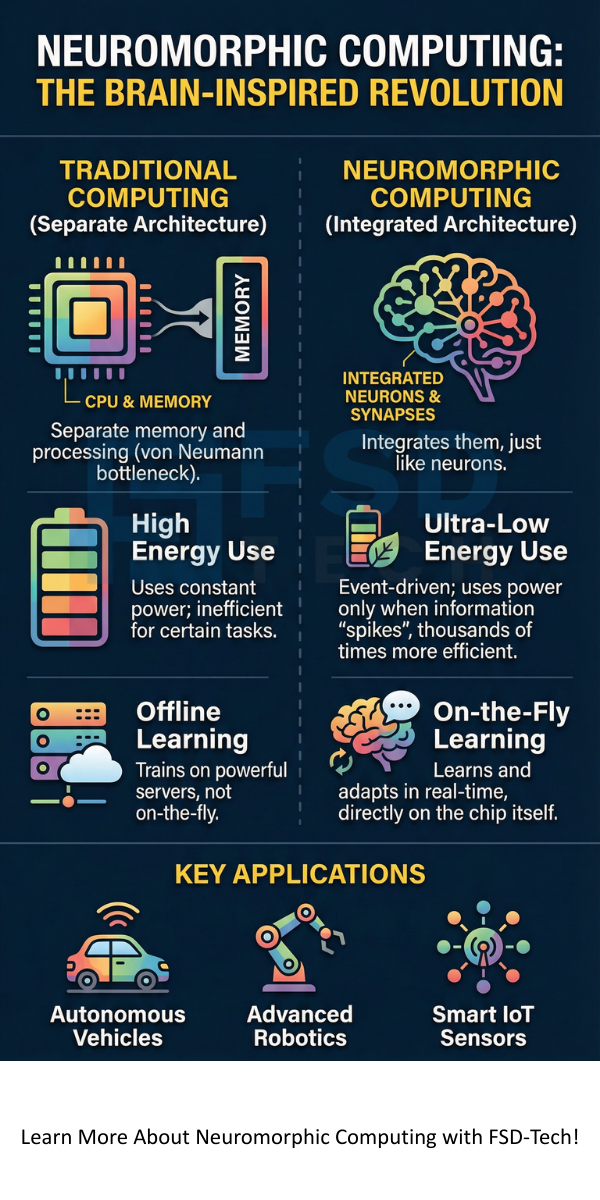

You constantly hear about the limits of traditional computing. Today's processors, based on the von Neumann architecture, struggle with tasks that require high efficiency and human-like intuition. Because they separate the memory and processing units, data must move back and forth between them, which wastes a lot of time and energy. This limitation is known as the von Neumann bottleneck.

Imagine a computer that works like your brain. A machine that processes information and learns with the energy efficiency and speed of the human nervous system. This is the core idea behind neuromorphic computing. This specialized technology moves beyond standard computer design by creating hardware that directly mimics the structure and function of biological neurons and synapses.

Do you want to know how we can make computers think faster, consume less power, and learn on their own? This technology provides a compelling answer, promising to revolutionize everything from advanced artificial intelligence (AI) to small, autonomous devices. We will explore how this exciting field of neuromorphic computing and engineering is poised to change the computing market forever.

What is neuromorphic computing exactly? Neuromorphic computing can be defined as an approach to computer engineering where elements of a computer system directly model the neural structures present in the human brain. This technology aims to overcome the speed and power limitations of conventional computing hardware by creating neuromorphic chips that process and store information in the same physical location, just like neurons.

A neuromorphic computing architecture implies a fundamental redesign of the chip layout. Instead of separate processors and memory, these systems implement spiking neural networks (SNNs) directly in silicon.


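Specialized neuromorphic computing hardware relies on components that replicate biological function. Its essential elements are artificial neurons, which process incoming signals, and artificial synapses, which connect neurons and store the strength (weight) of each connection.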
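To make the neuron-and-synapse idea concrete, here is a minimal Python sketch of a leaky integrate-and-fire (LIF) neuron, a common textbook neuron model that this article does not itself name. It assumes discrete time steps, a single input synapse represented by one weight, and illustrative values for the threshold and leak; real neuromorphic chips implement these dynamics in circuitry, not in Python.

```python
from dataclasses import dataclass


@dataclass
class LIFNeuron:
    """Leaky integrate-and-fire neuron (an assumed, simplified model)."""
    threshold: float = 1.0   # potential at which the neuron fires (illustrative value)
    leak: float = 0.9        # fraction of potential kept each time step (illustrative value)
    potential: float = 0.0   # membrane potential: state stored right next to the computation

    def step(self, input_current: float) -> bool:
        """Advance one time step and return True if the neuron spikes."""
        # Leak part of the stored potential, then integrate the new input.
        self.potential = self.potential * self.leak + input_current
        if self.potential >= self.threshold:
            self.potential = 0.0  # reset after firing
            return True
        return False


def run(neuron: LIFNeuron, input_spikes: list[int], weight: float) -> list[int]:
    """Drive the neuron through one synapse; the synaptic weight scales each incoming spike."""
    return [int(neuron.step(weight * spike)) for spike in input_spikes]


if __name__ == "__main__":
    # Ten input spikes through a synapse of weight 0.3 (hypothetical values).
    print(run(LIFNeuron(), [1] * 10, weight=0.3))
```

With these values the neuron needs four consecutive input spikes to cross its threshold, so it fires on the 4th and 8th steps and prints `[0, 0, 0, 1, 0, 0, 0, 1, 0, 0]`.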


Neuromorphic computing also operates in a fundamentally different way. Standard CPUs run synchronous, clock-driven operations; neuromorphic systems instead rely on asynchronous, event-driven processes in which neurons communicate only when they fire a spike.

This asynchronous spiking is key. Because circuits draw power only when spikes occur, energy use drops dramatically, making the approach ideal for mobile and edge computing applications.
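The energy argument can be illustrated with a rough Python sketch that counts neuron updates as a stand-in for energy (a simplifying assumption; real power depends on the circuit). A clock-driven design updates every neuron on every tick, while an event-driven design processes a queue of spike events and touches only the neurons each spike is wired to. The `fanout` connectivity map and all numbers are hypothetical.

```python
import heapq


def clock_driven_updates(num_neurons: int, num_ticks: int) -> int:
    """A synchronous design updates every neuron on every clock tick, even when idle."""
    return num_neurons * num_ticks


def event_driven_updates(events: list[tuple[int, int]],
                         fanout: dict[int, list[int]]) -> int:
    """An event-driven design does work only when a spike arrives.

    events: (time_step, source_neuron) pairs, in any order.
    fanout: hypothetical connectivity map, source neuron -> target neurons.
    """
    queue = list(events)
    heapq.heapify(queue)  # process spikes in time order
    updates = 0
    while queue:
        _time, source = heapq.heappop(queue)
        # Only the neurons wired to this source are touched; all others stay idle.
        updates += len(fanout.get(source, []))
    return updates


if __name__ == "__main__":
    fanout = {0: [10, 11], 1: [12, 13], 2: [14, 15]}
    events = [(5, 0), (40, 1), (90, 2)]
    print(clock_driven_updates(1000, 100))
    print(event_driven_updates(events, fanout))
```

With 1,000 neurons over 100 ticks, the clocked loop performs 100,000 updates, while the three sparse spikes in the example trigger only 6. The gap narrows as activity becomes denser, so the advantage is largest for sparse, bursty workloads such as sensor data.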

You probably hear about neural networks all the time when people discuss AI. It is vital to understand how neuromorphic computing differs from neural networks in their conventional, software-only form.

| Basis for Comparison | Conventional (von Neumann) Computing | Neuromorphic Computing |
|---|---|---|
| Architecture | Separate CPU and memory (von Neumann bottleneck) | Integrated processing and memory (brain-like) |
| Processing Unit | Central Processing Unit (CPU) | Artificial neurons and synapses |
| Data Flow | Continuous data streams (voltages or floating-point numbers) | Discrete, time-dependent spikes |
| Energy Consumption | High; constantly powered by a clock | Very low; event-driven (uses power only when spiking) |
| Learning Mechanism | Global, backpropagation-based; requires large datasets | Local, Spike-Timing-Dependent Plasticity (STDP); continuous |
| Best Suited For | High-precision arithmetic, complex general-purpose tasks | Real-time sensor processing, pattern recognition, edge computing |
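The table lists Spike-Timing-Dependent Plasticity (STDP) as the local learning rule. A common textbook form is the pair-based exponential rule sketched below in Python: if the presynaptic neuron fires shortly before the postsynaptic one, the synapse is strengthened; if it fires after, the synapse is weakened, and the effect fades as the two spikes move further apart. The amplitudes, time constants, and weight bounds are illustrative assumptions, not values from any specific chip.

```python
import math


def stdp_delta(t_pre: float, t_post: float,
               a_plus: float = 0.01, a_minus: float = 0.012,
               tau_plus: float = 20.0, tau_minus: float = 20.0) -> float:
    """Pair-based STDP weight change for one pre/post spike pair (times in ms)."""
    dt = t_post - t_pre
    if dt > 0:   # pre fired before post: strengthen (potentiation)
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:   # post fired before pre: weaken (depression)
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0   # simultaneous spikes: no change in this simple variant


def update_weight(w: float, t_pre: float, t_post: float,
                  w_min: float = 0.0, w_max: float = 1.0) -> float:
    """Apply the STDP change and clip the weight to [w_min, w_max]."""
    return min(w_max, max(w_min, w + stdp_delta(t_pre, t_post)))


if __name__ == "__main__":
    print(update_weight(0.5, t_pre=10.0, t_post=15.0))  # pre before post: weight rises
    print(update_weight(0.5, t_pre=15.0, t_post=10.0))  # post before pre: weight falls
```

The update depends only on the spike times at that one synapse, which is what makes the rule local: no global error signal is propagated backward, unlike backpropagation.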

The core distinction is that traditional artificial neural networks (ANNs) are software algorithms that run on von Neumann hardware. Neuromorphic computing is an entirely new hardware paradigm designed specifically to run Spiking Neural Networks (SNNs) efficiently.


The specialized characteristics of neuromorphic computing (low power, real-time learning, and event-driven operation) make it essential for a wide range of future applications. In particular, neuromorphic computing could revolutionize systems that need intelligence without a large power source.

Real-Time Sensory Processing

Because the technology models how the brain processes sensory data, it excels at tasks that demand an immediate response.
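Event-based vision sensors report changes in brightness rather than full frames. The Python sketch below loosely imitates that idea with simple delta (send-on-change) encoding: it emits an event with a polarity of +1 or -1 only when a sampled signal has moved by at least a threshold since the last event. The function name, threshold, and sample values are hypothetical; real event cameras operate per pixel on logarithmic light intensity.

```python
def delta_encode(samples: list[float], threshold: float) -> list[tuple[int, int]]:
    """Emit (sample_index, polarity) events only when the signal changes by at
    least `threshold` relative to the value at the last emitted event."""
    events: list[tuple[int, int]] = []
    if not samples:
        return events
    reference = samples[0]  # value at the last event (starts at the first sample)
    for i, value in enumerate(samples[1:], start=1):
        change = value - reference
        if abs(change) >= threshold:
            events.append((i, 1 if change > 0 else -1))  # +1 = increase, -1 = decrease
            reference = value
    return events


if __name__ == "__main__":
    signal = [0.0, 0.1, 0.1, 0.6, 0.6, 0.6, 0.1]  # hypothetical sensor readings
    print(delta_encode(signal, threshold=0.4))
```

Here only two events are produced, `[(3, 1), (6, -1)]`, and a steady signal produces none, so downstream spiking neurons stay idle until something in the scene actually changes.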

Edge AI and Internet of Things (IoT)

Its minimal power requirement makes on-device intelligence practical for small devices.

Advanced Scientific Simulation

Neuromorphic computing can help us learn more about the brain itself.


Quantum neuromorphic computing, the convergence of two powerful fields, explores how to use the principles of quantum mechanics to enhance neuromorphic systems. The field is still highly experimental, but the aim is to leverage quantum effects for faster and more complex synaptic weight adjustments, potentially surpassing even the most advanced classical neuromorphic computing technology.

Is neuromorphic computing the future? Rapid development and massive investment suggest a resounding yes. The neuromorphic computing market is growing significantly as major technology companies dedicate substantial resources to commercializing the technology.

The conceptual foundation for neuromorphic computing was established in the late 1980s, when Carver Mead, a professor at Caltech, coined the term while exploring how to build integrated circuits that mimic biological neural and sensory systems. This early work led to the creation of the silicon retina and the silicon cochlea, laying the groundwork for the advanced neuromorphic computing hardware we see today.

The shift to this new architecture provides essential advantages over traditional methods. The benefits of neuromorphic computing directly address the biggest challenges in modern AI and mobile computing.


Neuromorphic computing is far more than just a theoretical concept; it is an active and essential field of engineering that promises to break through the limitations of current computer design. By mimicking the event-driven, low-power operation of the brain, this technology delivers superior energy efficiency and real-time learning capabilities essential for the next generation of AI.

The future of advanced, always-on artificial intelligence depends on our ability to create systems that can function autonomously and efficiently in the real world. Neuromorphic computing technology provides a clear path to achieve this.

Does your company need to move its smart devices to the next level of energy efficiency and autonomous intelligence? Our team of neuromorphic computing and engineering experts can help you assess and integrate this cutting-edge technology into your product line, ensuring you lead the neuromorphic computing market with innovative, low-power solutions.

Connect with us today to start designing your next-generation autonomous system.

An ANN is a typical software model where neurons transmit continuous numerical values (like 0.5 or 0.9) to each other. An SNN, used in neuromorphic computing, is a system where neurons transmit discrete, time-dependent electrical pulses (spikes). This spiking action is what makes the SNN so much more power-efficient.
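The contrast can be sketched in Python. The first function is a conventional ANN neuron that outputs a continuous value; the second converts a value in [0, 1] into a spike train using deterministic rate coding. Rate coding is only one possible encoding, chosen here as a simplifying assumption (SNNs can also carry information in precise spike timing), and both function names are hypothetical.

```python
def ann_neuron(inputs: list[float], weights: list[float], bias: float = 0.0) -> float:
    """Conventional ANN neuron: weighted sum plus bias, passed through ReLU."""
    return max(0.0, sum(x * w for x, w in zip(inputs, weights)) + bias)


def rate_encode(value: float, steps: int) -> list[int]:
    """Represent a value in [0, 1] as a spike train whose spike count over
    `steps` time steps is proportional to the value (deterministic rate coding)."""
    if not 0.0 <= value <= 1.0:
        raise ValueError("value must be in [0, 1]")
    spikes: list[int] = []
    accumulator = 0.0
    for _ in range(steps):
        accumulator += value
        if accumulator >= 1.0:  # enough charge collected: emit one spike
            spikes.append(1)
            accumulator -= 1.0
        else:
            spikes.append(0)
    return spikes


if __name__ == "__main__":
    activation = ann_neuron([1.0, 0.5], [0.25, 0.5])  # continuous output
    print(activation)
    print(rate_encode(activation, steps=8))           # same value as discrete spikes
```

Here the ANN neuron outputs 0.5, and the spiking version conveys it as `[0, 1, 0, 1, 0, 1, 0, 1]`: four spikes in eight steps. Downstream work happens only on the steps that carry a spike, which is the efficiency gain described above.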

For general-purpose tasks like large-scale data processing or rendering graphics, traditional GPUs are still faster. However, for specialized tasks like real-time sensor processing, pattern recognition, and learning on the edge, neuromorphic computing hardware is much more efficient and can provide near-instantaneous results using far less energy.

The brain can perform complex tasks, like visual recognition and decision-making, while consuming only about 20 watts of power. A modern supercomputer performing the same tasks might consume thousands of times more energy. The brain’s massive parallelism and combined memory/processing structure offer the model for energy-efficient, powerful intelligence that neuromorphic computing aims to achieve.

Surbhi Suhane is an experienced digital marketing and content specialist with deep expertise in the Getting Things Done (GTD) methodology and process automation. She is adept at optimizing workflows and leveraging automation tools to enhance productivity and deliver impactful results in content creation and SEO.
