

🕓 February 15, 2026

A critical challenge of the digital era is the rise of hyper-realistic forged media. Increasingly sophisticated fake content, including videos, audio, and images, looks and sounds convincingly real. This is the work of deepfake technology, which uses Artificial Intelligence (AI) to create synthetic media that can deceive even careful observers.

This widespread use of manipulated content raises a serious question: What is Deepfake Detection?

Deepfake detection is the technology that helps us tell the real from the fake. It is an essential line of defense. The field involves a collection of tools and techniques designed to identify and flag media that has been synthetically generated or altered, often with malicious intent.

We will explore how this technology works, the specific signs it looks for, and the sophisticated algorithms that make it all possible.

To understand the solution, we must first clearly define the problem.

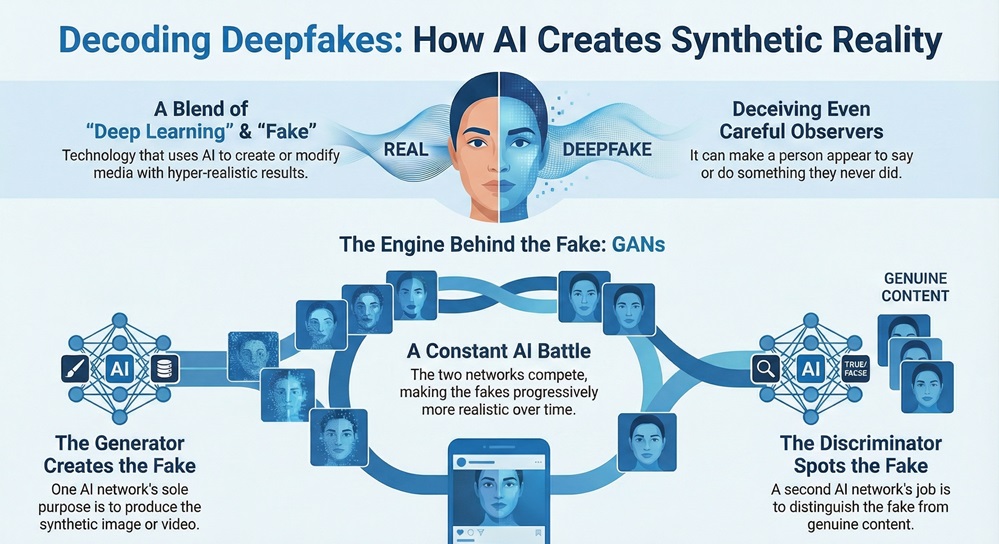

The word deepfake is a blend of "deep learning" and "fake." This technology leverages deep learning, a type of AI that uses complex neural networks, to create or modify media. Deepfake technology can make a person appear to say or do something they never did.

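The core principle relies on a structure known as a Generative Adversarial Network (GAN), which pairs two neural networks: a Generator that creates the fake media and a Discriminator that tries to tell it apart from real media.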

The two systems engage in a constant, competitive training process. The Generator improves its fakes to fool the Discriminator, while the Discriminator improves its ability to spot subtle flaws.

This back-and-forth cycle continues until the synthetic media becomes remarkably realistic, making it incredibly hard for a human observer to detect the manipulation.
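For readers who want the formal version, this competition is commonly written as a minimax objective. The notation is standard GAN notation rather than anything defined in this article: $G$ is the Generator, $D$ is the Discriminator, $x$ is a real sample, and $z$ is the random noise the Generator starts from.

$$
\min_{G} \max_{D} \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
$$

The Discriminator tries to make $V$ large by scoring real samples near 1 and generated samples near 0, while the Generator tries to make $V$ small by producing samples that the Discriminator scores near 1.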

In AI, the deepfake concept refers to the output of deep learning models, specifically those trained to map and transfer facial expressions, voices, or entire identities onto source media. This process uses algorithms like autoencoders or GANs to produce content where the subject's face is swapped, their expressions are altered, or their voice is cloned.

A deepfake video typically involves face swapping or expression manipulation. Face swapping superimposes one person's face onto another's body. Expression swapping changes the facial expressions of the subject in the video to match another person's movements.

Similarly, deepfake audio, or a "voice deepfake," uses AI to synthesize a voice, allowing a fraudster to make an individual appear to say anything they want. This has significant implications for financial fraud and social engineering scams.


Deepfake detection refers to the specialized technology and methodologies that aim to identify and verify the authenticity of digital media. Deepfake detection technology works by analyzing media for artifacts, inconsistencies, and statistical traces left behind during the AI generation process. These tools help ensure that digitally presented identities, evidence, and news are reliable and have not been manipulated.

Deepfake detection is an ongoing race against the creators of synthetic media. As deepfakes become more sophisticated, deepfake detection algorithms must evolve to find increasingly subtle clues.

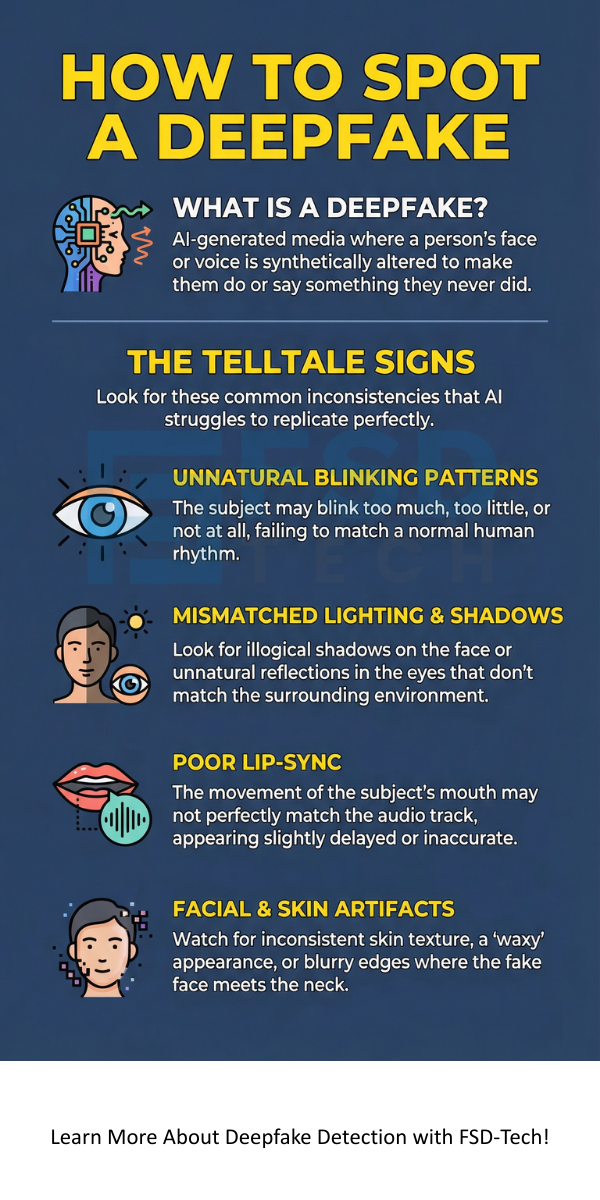

To understand how to detect deepfake videos, we analyze the specific types of anomalies that AI models consistently struggle to replicate perfectly.

The most common way to spot a deepfake involves looking for physical or physiological oddities. A deepfake detection system often focuses on tell-tale signs such as unnatural blinking, irregular facial expressions, and incorrect shadows.
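One physiological cue this article names is unnatural blinking. A minimal sketch of how a blink check might work is shown here, using the eye aspect ratio (EAR), a widely used measure computed from six eye landmarks. The landmark ordering, the `threshold` of 0.2, the `min_frames` value, and the sample coordinates are illustrative assumptions, not values taken from any particular detection product.

```python
import math


def eye_aspect_ratio(eye):
    """Compute the eye aspect ratio from six (x, y) landmarks p1..p6.

    Assumed ordering: p1 and p4 are the horizontal eye corners;
    (p2, p6) and (p3, p5) are the two vertical landmark pairs.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ear_per_frame, threshold=0.2, min_frames=2):
    """Count runs of at least min_frames consecutive frames with EAR below threshold."""
    blinks = 0
    run = 0
    for ear in ear_per_frame:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # a blink that is still in progress at the end
        blinks += 1
    return blinks


# Hypothetical landmark sets for an open and a nearly closed eye.
open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
closed_eye = [(0, 0), (1, 0.1), (2, 0.1), (3, 0), (2, -0.1), (1, -0.1)]

# Hypothetical per-frame EAR values for a short clip.
ear_series = [0.3, 0.3, 0.1, 0.1, 0.3, 0.1, 0.3, 0.1, 0.1, 0.1]
blink_count = count_blinks(ear_series)
```

A clip whose blink count is far below what is typical for a person over the same duration could then be passed on for closer inspection. In practice the landmarks would come from a face-landmark detector, which is outside the scope of this sketch.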


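More advanced methods of AI deepfake detection examine the digital fabric of the media file itself, looking for inconsistencies in compression level, Photo-Response Non-Uniformity (PRNU) noise, and the pixel-level fingerprints that GANs leave behind.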
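To make the idea of signal-level analysis concrete, here is a deliberately simplified sketch. It extracts a high-pass "noise residual" by subtracting each pixel's 3x3 neighbourhood mean, then compares the residual variance of two regions. This is a stand-in for real forensic noise analysis, not an implementation of PRNU extraction; the function names, the region coordinates, and the synthetic 8x8 image are all assumptions made for illustration.

```python
from statistics import pvariance


def noise_residual(image):
    """Return pixel minus 3x3 neighbourhood mean for every interior pixel.

    image is a list of rows of grayscale values. The result is two rows
    and two columns smaller because border pixels are skipped.
    """
    height, width = len(image), len(image[0])
    residual = []
    for y in range(1, height - 1):
        row = []
        for x in range(1, width - 1):
            window = [image[y + dy][x + dx] for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
            row.append(image[y][x] - sum(window) / 9.0)
        residual.append(row)
    return residual


def region_noise_variance(residual, top, left, bottom, right):
    """Population variance of the residual inside [top, bottom) x [left, right)."""
    values = [residual[y][x] for y in range(top, bottom) for x in range(left, right)]
    return pvariance(values)


# Synthetic image: the left half is perfectly smooth, the right half
# carries a checkerboard pattern standing in for natural sensor noise.
image = [
    [100 + (0 if x < 4 else (5 if (x + y) % 2 == 0 else -5)) for x in range(8)]
    for y in range(8)
]

residual = noise_residual(image)
smooth_var = region_noise_variance(residual, 0, 0, 6, 2)  # left region
noisy_var = region_noise_variance(residual, 0, 4, 6, 6)   # right region
```

A large gap between the noise levels of two regions that should come from the same camera, for example a pasted face and its background, is the kind of inconsistency a forensic tool would flag for further analysis.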

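When detecting a deepfake video, it is vital to look at the flow of information across frames, not just a single image. Typical giveaways are mismatched lip-sync, jerky head movements, and uncoordinated body motion.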
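A frame-to-frame check can be sketched very simply: track one facial landmark across frames and flag any frame where it jumps much further than usual. The example is a minimal illustration under assumed inputs. The `ratio` of 3.0 and the sample track are hypothetical, and production systems rely on learned temporal models rather than a fixed rule like this one.

```python
import math
import statistics


def frame_displacements(track):
    """Distance moved by one landmark between each pair of consecutive frames."""
    return [math.dist(a, b) for a, b in zip(track, track[1:])]


def flag_jerky_frames(track, ratio=3.0):
    """Return indices of frames whose jump from the previous frame is
    more than ratio times the median frame-to-frame displacement."""
    steps = frame_displacements(track)
    if not steps:
        return []
    # Guard against a zero median when the landmark barely moves.
    baseline = max(statistics.median(steps), 1e-9)
    return [i + 1 for i, step in enumerate(steps) if step > ratio * baseline]


# Hypothetical nose-tip positions over seven frames, with one sudden jump.
nose_track = [(0, 0), (1, 0), (2, 0), (3, 0), (10, 0), (11, 0), (12, 0)]
jerky = flag_jerky_frames(nose_track)
print(jerky)  # [4]
```

The same pattern extends to several landmarks at once, or to the offset between mouth movement and the audio track when checking lip-sync.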

The technological arms race requires highly advanced and specialized detection methods. The most effective deepfake detection system utilizes deep learning models trained on massive, diverse datasets of both real and fake media.

| Basis for Comparison | Physiological/Physical Artifacts | Signal/Compression Artifacts | Spatio-Temporal Inconsistencies |
|---|---|---|---|
| Focus Keyword | Deepfake Detection Techniques | Deepfake Detection Algorithms | Deepfake Detection System |
| Primary Focus | Human-visible flaws, biometrics | Digital noise, pixel-level traces, GAN fingerprints | Movement flow, audio-visual sync, frame-to-frame logic |
| Key Inconsistencies | Unnatural blinking, irregular facial expressions, incorrect shadows. | Inconsistencies in compression level, Photo-Response Non-Uniformity (PRNU) noise. | Mismatched lip-sync, jerky head movements, uncoordinated body motion. |
| Core Algorithm Type | Convolutional Neural Networks (CNNs) focused on facial features and key landmark points. | CNNs, statistical models, and forensic analysis tools. | Recurrent Neural Networks (RNNs), Long Short-Term Memory (LSTM) networks, optical flow analysis. |
| Advantage | Effective at identifying basic face-swaps; directly targets biological flaws. | Highly robust against slight post-processing; targets the source of the forgery. | Essential for video content; captures the dynamic flaws of the fake media. |
| Limitation | Less effective when deepfake quality is high or post-processing is applied. | Can be defeated by sophisticated forgery models that clean up their output. | Relies on the content being a video; less applicable to static images. |

Deepfake detection is driven primarily by three types of neural networks:

1. Convolutional Neural Networks (CNNs)

2. Recurrent Neural Networks (RNNs)

3. Autoencoders and Variational Autoencoders (VAEs)


Creating effective deepfake detection systems requires vast amounts of data. This need has led to efforts like the Deepfake Detection Challenge (DFDC), which provides large, diverse datasets of both authentic and manipulated videos. Training models on these datasets helps a deepfake detection algorithm generalize across various manipulation methods and different subjects, making the technology more robust.

The need for a reliable deepfake detection system has led to the growth of specialized companies and tools. These solutions apply the advanced algorithms we have discussed to high-stakes, real-world scenarios.

Deepfake detection technology plays a vital role in several industries.

The solutions available range from commercial enterprise platforms to open-source libraries used by researchers.

Deepfake detection is far more than a passing technological trend; it is a critical requirement for maintaining trust and security in the digital world. The technology for creating realistic fake media continues to advance, but so too does the science of detecting it.

We have seen that a robust deepfake detection system does not rely on a single solution. It is based on a combination of techniques: analyzing subtle physiological flaws, identifying signal-level artifacts, and looking for spatio-temporal inconsistencies across video frames. The most advanced systems leverage the power of deep learning models like CNNs and RNNs to find flaws invisible to the human eye.

Our company remains focused on securing your digital interactions. We ensure that our systems leverage the latest advancements in AI deepfake detection to offer a trustworthy and secure environment. Considering the high-stakes risk of synthetic media, we commit to continuously updating our technology, guaranteeing that you always have access to the most secure and reliable protection available.


Deepfakes are primarily used to spread disinformation, commit financial fraud by impersonating executives, bypass biometric security measures for account takeover, and create non-consensual explicit content, which causes severe reputational damage. In short, the technology enables high-stakes scams and malicious manipulation.

Deepfake technology typically relies on a Generative Adversarial Network (GAN) or on autoencoders. In a GAN, a generator network creates the fake media, while a discriminator network attempts to tell it apart from the real media. The two networks compete, resulting in highly realistic synthetic content that is difficult to distinguish from genuine media.

The biggest challenge is the continuous evolution of deepfake technology. This is often described as an "arms race." As soon as a deepfake detection algorithm learns to spot one type of artifact, the next generation of deepfakes eliminates that flaw, forcing the detection models to constantly adapt and train on newer, more sophisticated examples.

The accuracy of a deepfake detection system can vary widely, but state-of-the-art models, particularly those using advanced deep learning networks like XceptionNet or EfficientNet, often demonstrate an Area Under the Curve (AUC) of over 95% on public benchmark datasets. However, performance depends heavily on the quality and type of deepfake being tested.
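Since AUC is the headline metric here, a short sketch of what it measures may help. AUC equals the probability that a randomly chosen fake receives a higher detector score than a randomly chosen real sample, with ties counted as half. The labels and scores are made-up illustration data, not results from any benchmark, and the function name `roc_auc` is simply chosen for this example.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison.

    labels: 1 for fake, 0 for real. scores: higher means "more likely fake".
    """
    fake_scores = [s for label, s in zip(labels, scores) if label == 1]
    real_scores = [s for label, s in zip(labels, scores) if label == 0]
    if not fake_scores or not real_scores:
        raise ValueError("need at least one fake and one real sample")
    wins = 0.0
    for fake in fake_scores:
        for real in real_scores:
            if fake > real:
                wins += 1.0
            elif fake == real:
                wins += 0.5
    return wins / (len(fake_scores) * len(real_scores))


# Hypothetical detector outputs for three fakes and three real clips.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.5, 0.3, 0.1]
auc = roc_auc(labels, scores)
print(round(auc, 3))  # 0.889
```

Here one fake (score 0.4) ranks below one real clip (score 0.5), so 8 of the 9 fake/real pairs are ordered correctly. The pairwise loop is quadratic in the number of samples, which is fine for illustration; large evaluations would normally use a sort-based implementation from an established library.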

Surbhi Suhane is an experienced digital marketing and content specialist with deep expertise in Getting Things Done (GTD) methodology and process automation. Adept at optimizing workflows and leveraging automation tools to enhance productivity and deliver impactful results in content creation and SEO optimization.
