Inside Cato’s SASE Architecture: A Blueprint for Modern Security

🕓 January 26, 2025

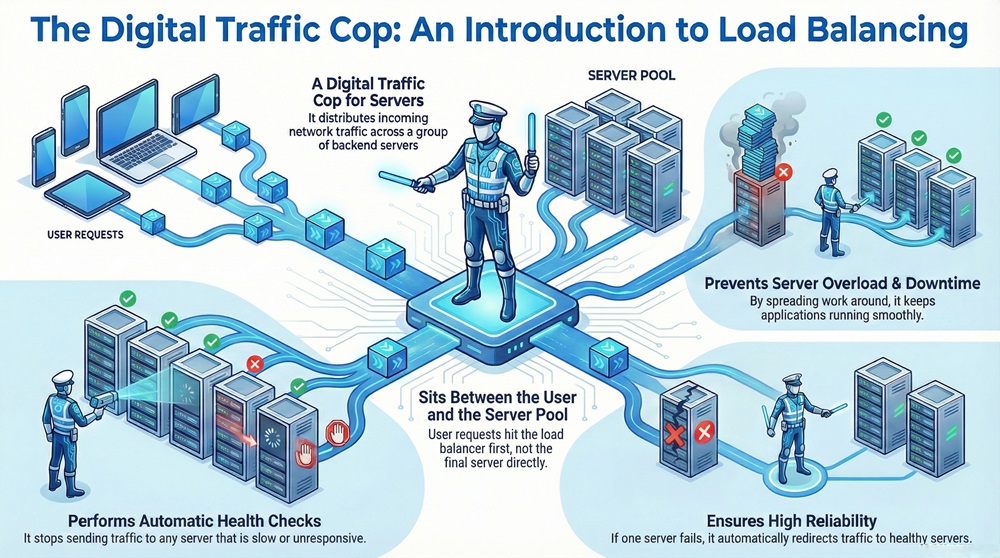

A load balancer acts as a digital traffic cop for your servers. Imagine a busy restaurant with only one waiter. If ten tables arrive at once, that waiter will struggle. Customers will wait too long for food. Some might even leave. Now, imagine a host at the front door who sends each guest to one of five different waiters. Everyone gets served faster. This is exactly what a load balancer does for websites and applications.

In the world of technology, a load balancer distributes incoming network traffic across a group of backend servers. These servers are often called a server farm or a server pool. By spreading the work around, a load balancer ensures that no single server becomes overwhelmed. This process keeps applications running smoothly and prevents downtime.

But how does it handle thousands of requests every second? Does it just pick a server at random, or is there a smarter method involved? To understand this, we must look at the core mechanics of how a load balancer functions within a network.

A load balancer in networking is a device or software that sits between the user and the server pool. When you type a website address into your browser, your request does not go straight to the final server. Instead, it hits the load balancer first.

The load balancer manages the flow of data to optimize resource use. It improves the response time of the application. If one server fails, the load balancer redirects traffic to the remaining healthy servers. This creates a highly reliable system. Without a load balancer, a single server crash could take your entire website offline.

Is a load balancer just a simple switch? No, it is much more. It performs "health checks" on every server in the pool. If a server is slow or unresponsive, the load balancer stops sending traffic to it. Once the server is fixed, it automatically joins the rotation again.

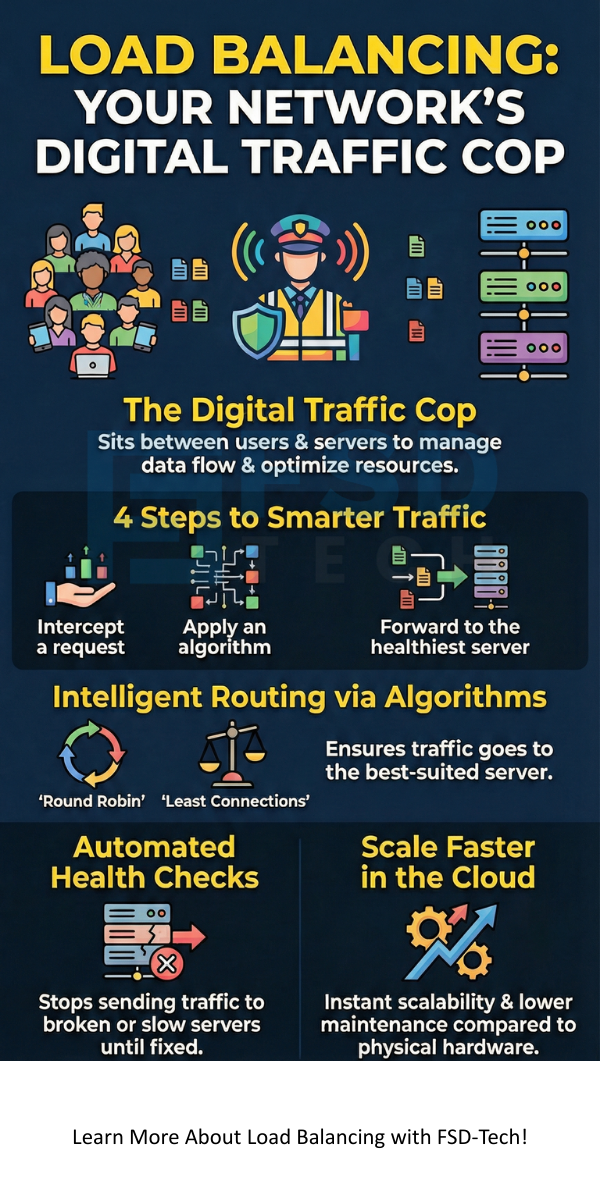

To understand what a load balancer is and how it works, we can break the process into four simple steps:

A user sends a request, which arrives at the load balancer first. The load balancer picks a healthy server from the pool and forwards the request to it. The server processes the request and sends the data back to the load balancer. Finally, the load balancer passes that data back to the user. This cycle happens in milliseconds. It happens so fast that you never notice the middleman.


| Basis for Comparison | Hardware Load Balancer | Software Load Balancer | Cloud Load Balancer |
|---|---|---|---|
| Definition | Physical load balancer device installed in a rack. | Application installed on standard servers. | Managed service provided by cloud vendors. |
| Cost | High initial investment for hardware. | Lower cost; relies on existing hardware. | Pay-as-you-go pricing model. |
| Scalability | Limited by physical hardware capacity. | Scales by adding instances on standard servers. | Scales automatically with traffic spikes. |
| Maintenance | Requires physical space and cooling. | Requires manual configuration and patches. | Managed entirely by the provider. |
| Deployment | On-premise data centers. | Local servers or virtual machines. | Cloud provider infrastructure. |

Load balancer types are usually categorized by where they sit in the Open Systems Interconnection (OSI) model. Understanding these levels helps you choose the right tool for your specific needs.

Layer 4 Load Balancers

A Layer 4 load balancer works at the transport level. It makes decisions using data from transport protocols like TCP or UDP. It does not look at the actual content of the message. It only looks at the source and destination IP addresses and port numbers. Because it does not "read" the data, it is extremely fast and efficient.
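As a rough illustration of a Layer 4 decision, the sketch below picks a backend using only addresses and ports, never the message body. The function name `pick_backend`, the hashing scheme, and the example IP addresses are assumptions made for this example; real products use their own methods.

```python
import hashlib

def pick_backend(src_ip, src_port, dst_ip, dst_port, backends):
    """Choose a backend using only transport-level connection details."""
    # Build a key from the connection's addresses and ports (no payload).
    key = f"{src_ip}:{src_port}->{dst_ip}:{dst_port}".encode()
    digest = hashlib.sha256(key).digest()
    # Map the hash onto the list of backends.
    index = int.from_bytes(digest[:8], "big") % len(backends)
    return backends[index]

# Hypothetical server pool and client connection.
BACKENDS = ["10.0.0.11", "10.0.0.12", "10.0.0.13"]
choice = pick_backend("203.0.113.7", 51514, "198.51.100.10", 443, BACKENDS)
```

Because the same connection details always produce the same hash, every packet of one connection lands on the same server.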

Layer 7 Load Balancers

A Layer 7 load balancer works at the application level. This type of load balancer is much smarter. It can look at HTTP headers, cookies, and even the content of the message. For example, it can send requests for images to one server and requests for videos to another. This allows for very precise traffic management.
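The image and video example above can be sketched as a small routing function. The pool names, URL prefixes, and the use of the `Accept` header are assumptions chosen for illustration, not rules from any specific product.

```python
def route_request(path, headers, default_pool="web-pool"):
    """Return the name of the server pool that should handle this request."""
    accept = headers.get("Accept", "")
    # Layer 7 routing can inspect the URL path and HTTP headers.
    if path.startswith("/images/") or accept.startswith("image/"):
        return "image-pool"
    if path.startswith("/videos/") or accept.startswith("video/"):
        return "video-pool"
    return default_pool

pool = route_request("/images/logo.png", {"Accept": "image/png"})
```

A Layer 4 load balancer could not make this choice, because the path and headers are part of the message content it never reads.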

Global Server Load Balancing (GSLB)

GSLB extends the concept across multiple geographic locations. If you have data centers in New York and London, GSLB sends the user to the closest one. This reduces "latency," which is the delay you feel when a website takes a long time to load.
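A minimal sketch of the "closest data center" idea, using the New York and London example. It assumes the user's coordinates are already known and compares straight-line distance only; real GSLB systems often rely on DNS and measured latency instead. The names `DATA_CENTERS` and `closest_data_center` are hypothetical.

```python
import math

# Approximate (latitude, longitude) of each hypothetical data center.
DATA_CENTERS = {
    "new-york": (40.7128, -74.0060),
    "london": (51.5074, -0.1278),
}

def haversine_km(a, b):
    """Great-circle distance in kilometers between two (lat, lon) points."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    dlat, dlon = lat2 - lat1, lon2 - lon1
    h = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    return 2 * 6371.0 * math.asin(math.sqrt(h))

def closest_data_center(user_location, data_centers=DATA_CENTERS):
    """Return the name of the data center nearest to the user."""
    return min(data_centers, key=lambda name: haversine_km(user_location, data_centers[name]))

paris_user = (48.8566, 2.3522)
nearest = closest_data_center(paris_user)
```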

A load balancer algorithm is the set of rules that determines where traffic goes. There are several common load balancer methods used in the industry today.
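Two widely used examples are round robin, which hands requests to each server in turn, and least connections, which picks the server with the fewest active connections. The sketch below is a simplified illustration; the class and method names are assumptions for this example.

```python
import itertools

class RoundRobin:
    """Hand out servers in a fixed, repeating order."""
    def __init__(self, servers):
        self._cycle = itertools.cycle(servers)

    def next_server(self):
        return next(self._cycle)

class LeastConnections:
    """Pick the server that currently has the fewest active connections."""
    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def acquire(self):
        server = min(self.active, key=self.active.get)
        self.active[server] += 1  # a new connection is now open
        return server

    def release(self, server):
        self.active[server] -= 1  # the connection has finished

rr = RoundRobin(["a", "b", "c"])
order = [rr.next_server() for _ in range(4)]
```

Round robin works well when requests cost about the same. Least connections tends to suit workloads where some requests stay open much longer than others.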


The load balancer in cloud computing has changed how businesses manage traffic. In the past, you had to buy a physical load balancer device. This was expensive and hard to set up. Today, services like Oracle Cloud or AWS provide these as virtual tools.

A load balancer in cloud computing is highly flexible. If your website suddenly gets a million hits, the cloud provider automatically adds more capacity. AWS, for example, calls its service "Elastic Load Balancing." This elasticity ensures that your application stays available even during massive traffic surges.

Why is this so useful? It removes the need for manual hardware management. You do not have to worry about cables, power, or physical space. You simply configure the rules in a dashboard, and the load balancer handles the rest.

You might wonder why load balancing is important if you only have a small amount of traffic. The truth is that load balancing is about more than just speed. It is about reliability and security.

Load balancer systems provide a layer of defense. They can help stop Distributed Denial of Service (DDoS) attacks. By spreading traffic, they prevent a flood of fake requests from crashing a single server.

Furthermore, load balancer setups allow for "Zero Downtime" updates. You can take one server offline for maintenance while the others keep running. The users will never know a server was missing. This makes the load balancer server pool a vital part of professional IT infrastructure.
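The idea of updating one server at a time can be sketched as a simple loop. This is an illustration under assumptions: `rolling_update` and the `update` callback are hypothetical names, and a real setup would also wait for open connections to finish before maintenance begins.

```python
def rolling_update(servers, update):
    """Update servers one at a time, keeping the rest in rotation."""
    in_rotation = set(servers)
    for server in servers:
        in_rotation.discard(server)  # stop sending new traffic to this server
        if not in_rotation:
            raise RuntimeError("no servers left to serve traffic")
        update(server)               # hypothetical maintenance step
        in_rotation.add(server)      # return the server to the pool
    return in_rotation

updated = []
pool = rolling_update(["web-1", "web-2", "web-3"], updated.append)
```

The check inside the loop shows why this only works with at least two servers: with a single server, taking it out of rotation leaves nobody to answer requests.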

A common question is: is a load balancer a reverse proxy? The answer is often yes, but with a specific focus. A reverse proxy is any server that sits in front of web servers and forwards client requests to them.

Most application-level load balancers work as reverse proxies, but not all reverse proxies are load balancers. A simple reverse proxy might just handle encryption or caching for one server. A load balancer specifically focuses on distributing that traffic across multiple servers. Think of the load balancer as a sophisticated version of a reverse proxy designed for scale.


Modern apps often use many small pieces of code instead of one big program. This is called a microservices architecture. A load balancer in microservices is essential for communication.

In this setup, each service might have its own pool of instances. A load balancer ensures that when Service A needs to talk to Service B, it finds an available instance.

In microservices, a load balancer relies on a process called "Service Discovery." The load balancer keeps a live list of every service instance currently running. This allows the system to be very dynamic. Services can start and stop at any time without breaking the connection.
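A minimal in-memory sketch of the "live list" behind service discovery. The `ServiceRegistry` class, its method names, and the example addresses are assumptions for illustration; production systems usually use a dedicated registry service.

```python
import random

class ServiceRegistry:
    """Track which instances of each service are currently running."""
    def __init__(self):
        self._instances = {}  # service name -> set of "host:port" strings

    def register(self, service, address):
        """Called when an instance starts."""
        self._instances.setdefault(service, set()).add(address)

    def deregister(self, service, address):
        """Called when an instance stops."""
        self._instances.get(service, set()).discard(address)

    def resolve(self, service):
        """Return the address of one running instance of the service."""
        instances = self._instances.get(service)
        if not instances:
            raise LookupError(f"no running instance of {service!r}")
        return random.choice(sorted(instances))

registry = ServiceRegistry()
registry.register("service-b", "10.0.1.5:8080")
registry.register("service-b", "10.0.1.6:8080")
target = registry.resolve("service-b")
```

When Service A needs Service B, it asks for "service-b" by name and receives whichever instance is available at that moment.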

Deciding between a physical load balancer device and a software solution depends on your budget and needs.

A physical load balancer device is often a high-performance appliance. It has specialized chips designed to handle massive amounts of data. These are common in large corporate data centers where security and raw power are the top priorities.

On the other hand, software-based load balancer solutions run on standard hardware. They are much cheaper and easier to update. Many companies prefer software because it can be integrated into "DevOps" pipelines. This means the code that builds your website can also build your load balancer automatically.

To get the most out of your load balancer network, you must use the right optimization methods.

These load balancer methods transform a simple traffic distributor into a powerful performance engine.

How does the system know if a server is broken? The load balancer performs constant "Health Checks."

The load balancer sends a small "ping" or request to each server every few seconds. If the server says "I am okay," traffic continues. If the server does not respond, the load balancer marks it as "down."

This happens automatically. It keeps users from running into timeouts or "Service Unavailable" error pages. By the time a human technician realizes a server is down, the load balancer has already protected the users by moving them to a different server.
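The health check loop can be sketched as follows. The `probe` function stands in for the small request sent to each server and is a hypothetical placeholder. The `max_failures` threshold is also an assumption: many load balancers wait for several missed checks in a row before marking a server as down.

```python
class HealthChecker:
    """Track consecutive failed checks and report which servers are healthy."""
    def __init__(self, servers, probe, max_failures=3):
        self.probe = probe                # callable: server -> True if it responds
        self.max_failures = max_failures  # missed checks allowed before "down"
        self.failures = {server: 0 for server in servers}

    def run_checks(self):
        """Probe every server once and update its failure count."""
        for server in self.failures:
            try:
                ok = self.probe(server)
            except Exception:
                ok = False  # an error counts as no response
            self.failures[server] = 0 if ok else self.failures[server] + 1

    def healthy_servers(self):
        """Servers that should keep receiving traffic."""
        return [s for s, n in self.failures.items() if n < self.max_failures]

# Simulated outage: server "b" does not respond.
down = {"b"}
checker = HealthChecker(["a", "b"], probe=lambda s: s not in down, max_failures=2)
checker.run_checks()
checker.run_checks()
healthy = checker.healthy_servers()
```

A successful check resets the count to zero, so a repaired server rejoins the rotation on its next good response.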

The load balancer is evolving. We are moving toward "Application Delivery Controllers" (ADCs). These are next-generation load balancers that include firewalls, data compression, and advanced security.

We are also seeing more "Predictive Load Balancing." This uses artificial intelligence to look at past traffic patterns. If the system knows you get more traffic every Friday at 5 PM, it can start preparing the load balancer server pool in advance.

Managing network traffic is a complex task, but a load balancer makes it simple. By acting as a central hub, it ensures that every user gets a fast and reliable experience. Whether you use a physical load balancer device or a load balancer in cloud computing, the benefits are clear. You gain better speed, stronger security, and the ability to grow your business without fear of a crash.

Our team focuses on building robust systems that stand the test of time. We believe that your technology should work for you, not the other way around. By focusing on smart networking and client-centric solutions, we help you stay ahead in a digital world. Let us help you build a network that never sleeps.


The primary purpose of a load balancer is to distribute incoming network traffic across multiple servers. This prevents any single server from becoming a bottleneck and ensures high availability for the application.

Using Global Server Load Balancing (GSLB), a load balancer can route users to the data center that is physically closest to them, which significantly reduces latency.

A load balancer is not limited to web traffic. It can manage many types of traffic, including database queries, email protocols (like SMTP), and DNS requests.

A load balancer can itself become a single point of failure. To prevent this, engineers usually deploy load balancers in "High Availability" pairs. If the primary load balancer device fails, the secondary one takes over.

A load balancer also improves security. It masks the IP addresses of the backend servers, making it harder for hackers to target them directly. It also provides a central point to apply security patches and filter out malicious traffic.

Surbhi Suhane is an experienced digital marketing and content specialist with deep expertise in Getting Things Done (GTD) methodology and process automation. Adept at optimizing workflows and leveraging automation tools to enhance productivity and deliver impactful results in content creation and SEO optimization.
