

🕓 February 15, 2026

For the past 9 days, we’ve taken you through the essential foundations of AI governance and AI security:

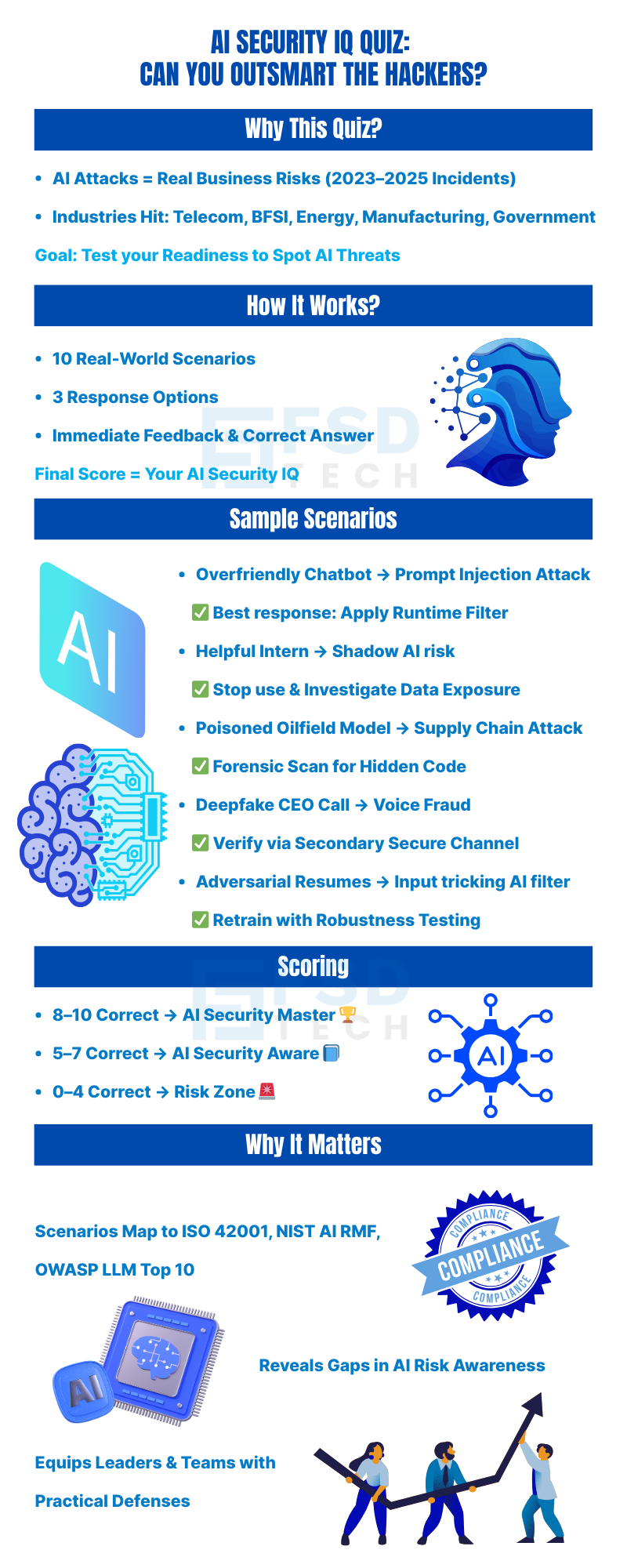

Now it’s time to find out: Have you been paying attention — and could you spot an AI security threat in the wild?

Today’s blog isn’t just a quiz; it’s an interactive learning experience. Every scenario is based on real-world incidents from the telecom, BFSI, energy, manufacturing, and government sectors.

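You’ll get 10 real-world AI attack scenarios, each with a set of possible responses, the correct answer, and an explanation of the threat and the right defense.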

Scenario 1: A customer service chatbot at a leading bank in Dubai starts giving unusually detailed answers to account-related queries. An attacker has embedded a special phrase into a chat that triggers the bot to retrieve sensitive data from its training database.

Your Response:

A) Disable the chatbot until a model retraining can be scheduled.

B) Apply a runtime filter that blocks sensitive information before sending outputs.

C) Publicly warn all customers that the chatbot is compromised and shut down the banking site.

Correct Answer: B

Explanation:

This is a Prompt Injection Attack. Disabling the bot (A) causes service disruption without solving the root problem, and publicly shutting down the site (C) creates unnecessary panic. PointGuard AI’s Runtime Defense can detect and block these malicious patterns in real time without downtime.
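The runtime filter in option B can be pictured as a last check between the model and the customer. Below is a minimal Python sketch, assuming the sensitive data takes regex-detectable forms such as UAE IBANs, card numbers, and email addresses; `SENSITIVE_PATTERNS` and `filter_output` are hypothetical names for illustration, not PointGuard AI’s API. Pattern matching only catches well-formatted identifiers, so it complements, rather than replaces, closing the injection path itself.

```python
import re

# Hypothetical patterns for data the chatbot must never return; tune to your own formats.
# Order matters: IBANs are redacted before the broader card-number pattern runs.
SENSITIVE_PATTERNS = {
    "iban": re.compile(r"\bAE\d{21}\b"),              # UAE IBAN: "AE" followed by 21 digits
    "card": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),  # 13-16 digits, optionally space/dash separated
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.]+\b"),
}


def filter_output(text: str) -> tuple[str, list[str]]:
    """Redact sensitive matches from a model response before it is sent.

    Returns the redacted text plus the names of the patterns that fired,
    so the event can be logged and investigated as a possible injection.
    """
    hits = []
    for name, pattern in SENSITIVE_PATTERNS.items():
        if pattern.search(text):
            hits.append(name)
            text = pattern.sub(f"[REDACTED {name.upper()}]", text)
    return text, hits


reply, fired = filter_output("Your IBAN is AE070331234567890123456 and card 4111 1111 1111 1111.")
print(reply)  # Your IBAN is [REDACTED IBAN] and card [REDACTED CARD].
print(fired)  # ['iban', 'card']
```

Logging the `fired` list, not just redacting, matters here: a sudden burst of redactions is itself evidence that someone is probing the bot.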

Scenario 2: An intern at your fintech startup uses a free AI summarization tool to prepare investor reports. They upload raw client data that includes transaction records and account balances.

Your Response:

A) Nothing to worry about — summarization tools don’t store data.

B) Immediately stop use, review the tool’s privacy policy, and investigate data exposure.

C) Continue using it but encrypt the PDFs before upload.

Correct Answer: B

Explanation:

This is Shadow AI. Encrypting the PDFs (C) doesn’t help: the tool has to read the content to summarize it, so the vendor can still store and analyze the data. AI governance policies must define approved tools and block unapproved AI uploads via security controls like PointGuard AI’s AI Asset Discovery.
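Blocking unapproved AI uploads usually comes down to an egress allowlist enforced at a proxy, gateway, or DLP layer. The sketch below shows only the decision logic, assuming uploads can be identified by destination URL; the domain names and `is_upload_allowed` are hypothetical placeholders, not any product’s configuration.

```python
from urllib.parse import urlparse

# Hypothetical allowlist: the AI services your governance policy has approved.
APPROVED_AI_DOMAINS = {"ai.internal.example.com", "approved-vendor.example.net"}


def is_upload_allowed(destination_url: str) -> bool:
    """Allow uploads only to approved hosts or their subdomains; deny everything else."""
    host = (urlparse(destination_url).hostname or "").lower()
    # Exact match or a true subdomain; a plain endswith(d) would accept "evil-ai.internal.example.com"-style tricks.
    return any(host == d or host.endswith("." + d) for d in APPROVED_AI_DOMAINS)


for url in [
    "https://ai.internal.example.com/summarize",
    "https://eu.approved-vendor.example.net/v1/upload",
    "https://free-summarizer.example.org/upload",
    "https://ai.internal.example.com.attacker.io/upload",  # look-alike suffix trick
]:
    print(url, "->", "allow" if is_upload_allowed(url) else "block")
```

Deny-by-default is the design choice that matters: a new free AI tool is blocked the day it appears, instead of after someone notices it.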

Scenario 3: An oil & gas company downloads a predictive maintenance AI model from an open-source platform. Weeks later, safety alarms fail during a real equipment fault.

Your Response:

A) Replace the model with a commercial vendor’s version immediately.

B) Conduct a forensic scan of the model for hidden malicious code.

C) Increase manual inspections of all equipment and stop AI use entirely.

Correct Answer: B

Explanation:

This is an AI Supply Chain Attack. The model likely had a backdoor. Replacing it without investigation (A) risks importing similar threats. PointGuard AI’s Model Scanning & AI-BOM Tracking can detect and block such poisoned models before deployment.
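Many shared models are distributed as Python pickle files (PyTorch checkpoints wrap one inside a zip archive), and unpickling can execute arbitrary code. A forensic scan can therefore start by listing which functions a pickle would import, without ever loading it. The sketch below uses the standard-library `pickletools.genops` disassembler on a raw pickle stream; the `SUSPICIOUS_MODULES` set is an illustrative assumption, and this is not a substitute for a dedicated scanner or for PointGuard AI’s Model Scanning. Where possible, tensor-only formats such as safetensors avoid this risk altogether.

```python
import pickletools

# Illustrative, not exhaustive: modules a model file has no business importing.
SUSPICIOUS_MODULES = {"os", "posix", "nt", "subprocess", "socket", "sys",
                      "shutil", "builtins", "__builtin__"}


def scan_pickle(data: bytes) -> list[str]:
    """List suspicious 'module.name' imports in a pickle stream WITHOUT unpickling it."""
    findings = []
    strings = []  # string constants seen so far; STACK_GLOBAL takes the last two
    for opcode, arg, _pos in pickletools.genops(data):
        ref = None
        if opcode.name in ("GLOBAL", "INST"):  # protocols 0-3: arg is "module name"
            ref = arg.split(" ", 1)
        elif opcode.name == "STACK_GLOBAL" and len(strings) >= 2:  # protocol 4+
            # Simplification: memoized strings fetched via BINGET are not tracked here.
            ref = strings[-2:]
        elif isinstance(arg, str) and ("UNICODE" in opcode.name or "STRING" in opcode.name):
            strings.append(arg)
        if ref and ref[0].split(".")[0] in SUSPICIOUS_MODULES:
            findings.append(".".join(ref))
    return findings


if __name__ == "__main__":
    import os
    import pickle

    class Backdoor:
        # Pickling only RECORDS this call; it would run when someone unpickles the file.
        def __reduce__(self):
            return (os.system, ("echo compromised",))

    print(scan_pickle(pickle.dumps(Backdoor())))               # e.g. ['posix.system'] on Linux
    print(scan_pickle(pickle.dumps({"weights": [0.1, 0.2]})))  # []
```

A clean result is not proof of safety (a backdoor can also live in the weights themselves), which is why scanning is paired with AI-BOM provenance tracking.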

Scenario 4: A senior accountant receives a call from someone sounding exactly like the CEO, asking to urgently transfer funds to a “partner” account.

Your Response:

A) Proceed — the voice matches.

B) Verify via a secondary channel like encrypted email or in-person approval.

C) Ask the “CEO” security questions only they would know.

Correct Answer: B

Explanation:

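This is a Deepfake Voice Attack. A matching voice proves nothing (A), and security questions (C) can often be answered from leaked or publicly available information. Voice biometrics plus MFA on high-value transactions should be standard. PointGuard AI Runtime Monitoring can also detect deepfake patterns in real time for voice-enabled AI systems.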
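The verification rule behind option B can be written down as a simple approval policy in which a caller’s voice is never sufficient on its own. A minimal sketch, assuming a hypothetical AED threshold and that MFA and an independently initiated confirmation (a call-back to a number on file, or in-person sign-off) are recorded as flags on the request; `TransferRequest` and `can_execute` are illustrative names, not a real payments API.

```python
from dataclasses import dataclass

HIGH_VALUE_THRESHOLD = 50_000  # hypothetical limit (AED); set according to your own policy


@dataclass
class TransferRequest:
    amount: float
    new_beneficiary: bool                # first payment to this account?
    mfa_passed: bool = False
    confirmed_out_of_band: bool = False  # call-back to a number on file, or in-person sign-off


def can_execute(req: TransferRequest) -> tuple[bool, str]:
    """Decide whether a transfer may proceed; a voice on the phone never counts as proof."""
    high_risk = req.amount >= HIGH_VALUE_THRESHOLD or req.new_beneficiary
    if not high_risk:
        return True, "within normal limits"
    if not req.mfa_passed:
        return False, "blocked: MFA required"
    if not req.confirmed_out_of_band:
        return False, "blocked: confirm through a second, independently initiated channel"
    return True, "verified"


print(can_execute(TransferRequest(amount=250_000, new_beneficiary=True, mfa_passed=True)))
```

The key detail is "independently initiated": the accountant calls back on a known number rather than replying to the incoming call or message.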

Scenario 5: A recruitment AI is fooled by resumes with subtle formatting tricks, bypassing filters and ranking unqualified candidates as top choices.

Your Response:

A) Manually review all resumes from now on.

B) Retrain the AI with adversarial robustness testing.

C) Ban PDF resume submissions entirely.

Correct Answer: B

Explanation:

This is an Adversarial Input Attack. Banning PDF submissions (C) is a blunt measure, and manual review (A) removes the efficiency benefits. The right move is adversarial training to harden the AI model, which PointGuard AI facilitates via Automated Red Teaming.
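Adversarial robustness testing starts by checking that meaning-preserving formatting changes don’t move a candidate’s score. The sketch below uses a deliberately simple keyword scorer as a hypothetical stand-in for the recruitment model, two illustrative tricks (zero-width characters and full-width look-alike letters), and a whitespace change as a control. It shows the testing side only; retraining a real model on the failing cases is beyond a short example.

```python
import unicodedata

REQUIRED_SKILLS = {"python", "sql", "aws"}  # hypothetical job requirements
INVISIBLE = dict.fromkeys(map(ord, "\u200b\u200c\u200d\u2060\ufeff"))  # zero-width chars -> deleted


def naive_score(text: str) -> int:
    """Toy stand-in for the model: count required skills mentioned in the resume."""
    return len(REQUIRED_SKILLS & set(text.lower().replace(",", " ").split()))


def robust_score(text: str) -> int:
    # NFKC folds look-alike forms (e.g. full-width letters) to ASCII; then drop invisible chars.
    cleaned = unicodedata.normalize("NFKC", text).translate(INVISIBLE)
    return naive_score(cleaned)


# Meaning-preserving formatting tricks (illustrative, not exhaustive).
PERTURBATIONS = {
    "zero_width": lambda t: "\u200b".join(t),
    "fullwidth": lambda t: "".join(chr(ord(c) + 0xFEE0) if "!" <= c <= "~" else c for c in t),
    "whitespace": lambda t: t.replace(" ", " \n\t "),  # control: should never matter
}


def robustness_report(score, text: str) -> list[str]:
    """Names of perturbations that change the score; an empty list means robust."""
    baseline = score(text)
    return [name for name, perturb in PERTURBATIONS.items() if score(perturb(text)) != baseline]


resume = "Skills: Python, SQL, AWS"
print(robustness_report(naive_score, resume))   # ['zero_width', 'fullwidth']
print(robustness_report(robust_score, resume))  # []
```

In a red-teaming pipeline, every perturbation that still changes the score becomes a new training or test case.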

Scenario 6: Your AI-powered analytics platform integrates with a third-party API to enrich customer profiles. A security audit finds the API endpoint is returning more data than requested, including PII.

Your Response:

A) Ignore — more data is better.

B) Stop the API calls until scope and security are fixed.

C) Add extra encryption for API responses.

Correct Answer: B

Explanation:

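This is an Insecure Integration. Adding encryption (C) protects the data in transit but doesn’t stop you from receiving PII you never asked for, and over-sharing APIs violate data minimization principles under GDPR and UAE data protection laws. Use PointGuard AI API Monitoring to detect anomalies and enforce strict data-return policies.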
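A strict data-return policy can also be enforced on the client side by comparing each response against the fields you actually requested. A minimal sketch, assuming dict-shaped (e.g. parsed JSON) responses; the field names, `OverSharingError`, and `minimize_response` are hypothetical. Unexpected non-sensitive fields are dropped, while unrequested PII halts processing, mirroring option B.

```python
REQUESTED_FIELDS = {"customer_id", "industry", "company_size"}  # hypothetical contract
PII_FIELDS = {"name", "email", "phone", "national_id", "date_of_birth", "home_address"}


class OverSharingError(Exception):
    """Raised when a third-party API returns PII that was never requested."""


def minimize_response(payload: dict) -> dict:
    """Keep only requested fields; stop if the provider over-shares PII."""
    extra = set(payload) - REQUESTED_FIELDS
    leaked_pii = extra & PII_FIELDS
    if leaked_pii:
        # Stop and alert rather than silently discarding: the provider is
        # sending data your agreement and your privacy notice do not cover.
        raise OverSharingError(f"unrequested PII returned: {sorted(leaked_pii)}")
    return {k: v for k, v in payload.items() if k in REQUESTED_FIELDS}


print(minimize_response({"customer_id": 7, "industry": "energy", "tier": "gold"}))
# {'customer_id': 7, 'industry': 'energy'}
```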

Scenario 7: A financial analysis AI, intended to process only public market data, starts pulling from internal financial reports.

Your Response:

A) Treat as a feature upgrade.

B) Reassess permissions and enforce least privilege access.

C) Widen access so more staff can benefit.

Correct Answer: B

Explanation:

This is a Misconfigured Access Control problem. Least privilege and strict role-based access are required under ISO 42001. PointGuard AI’s AI Security Posture Management scans for such over-permissioned AI models.
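Least privilege means each AI system gets an explicit list of data sources and nothing else. The sketch below assumes access decisions and logs can be expressed as (system, data source) pairs; the system names, `GRANTS`, `authorize`, and `audit` are hypothetical. The audit step surfaces exactly the kind of drift described in this scenario.

```python
# Hypothetical grants: each AI system may read only the sources listed for it.
GRANTS = {
    "market-analysis-ai": {"public_market_data"},
    "finance-copilot": {"public_market_data", "internal_financial_reports"},
}


def authorize(ai_system: str, data_source: str) -> bool:
    """Deny by default: unknown systems and ungranted sources are refused."""
    return data_source in GRANTS.get(ai_system, set())


def audit(access_log):
    """Return (system, source) accesses that fall outside the system's grants."""
    return [(s, d) for s, d in access_log if not authorize(s, d)]


log = [
    ("market-analysis-ai", "public_market_data"),
    ("market-analysis-ai", "internal_financial_reports"),  # the drift in this scenario
    ("finance-copilot", "internal_financial_reports"),
]
print(audit(log))  # [('market-analysis-ai', 'internal_financial_reports')]
```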

Scenario 8: A competitor sends thousands of carefully crafted queries to your AI SaaS product to reverse-engineer its behavior.

Your Response:

A) Tighten API rate limits and detect abnormal usage patterns.

B) Do nothing — model replication is unavoidable.

C) Move the model to a public repository for transparency.

Correct Answer: A

Explanation:

This is a Model Extraction Attack. It can be mitigated with rate limiting, usage-pattern monitoring, and output watermarking. PointGuard AI helps detect and mitigate these behaviors before your IP is stolen.
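Rate limiting makes large-scale querying slower and more expensive, and the rejections themselves are a useful signal for abnormal-usage monitoring. Below is a minimal per-API-key sliding-window limiter, assuming requests are keyed by API key; the class, its parameters, and the injectable clock are illustrative choices, and output watermarking is not shown.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `limit` queries per API key in any `window`-second span."""

    def __init__(self, limit: int, window: float, clock=time.monotonic):
        self.limit = limit
        self.window = window
        self.clock = clock                    # injectable so the limiter is easy to test
        self.calls = defaultdict(deque)       # api_key -> timestamps of allowed calls
        self.rejections = defaultdict(int)    # api_key -> rejected calls; feed to monitoring

    def allow(self, api_key: str) -> bool:
        now = self.clock()
        q = self.calls[api_key]
        while q and now - q[0] >= self.window:  # forget calls that left the window
            q.popleft()
        if len(q) >= self.limit:
            self.rejections[api_key] += 1
            return False
        q.append(now)
        return True


limiter = SlidingWindowLimiter(limit=100, window=60.0)
print(limiter.allow("customer-123"))  # True
```

A key that keeps hitting the limit around the clock is worth investigating even if each individual query looks harmless.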

Scenario 9: A telecom’s 5G traffic-routing AI is hit with adversarial packets, tricking it into misclassifying high-priority traffic as low-priority.

Your Response:

A) Switch to manual routing until further notice.

B) Deploy adversarial robustness and real-time anomaly detection.

C) Limit all traffic routing decisions to non-AI systems.

Correct Answer: B

Explanation:

This is a Model Evasion Attack in telecom networks. PointGuard AI provides real-time AI Runtime Defense and adversarial testing to harden routing models against such exploits.
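Real-time anomaly detection can start with a statistical baseline on the model’s own outputs. The sketch below assumes you track, per time interval, the share of traffic the routing model classifies as high priority, and flags intervals far outside the recent baseline using a rolling z-score; the window size, threshold, and class name are illustrative assumptions, and the adversarial-training half of option B is not shown.

```python
from collections import deque
from statistics import mean, stdev


class RollingZScoreDetector:
    """Flag values far from the recent baseline (a simple real-time anomaly check)."""

    def __init__(self, window: int = 30, threshold: float = 3.0):
        self.history = deque(maxlen=window)  # recent "normal" observations
        self.threshold = threshold           # how many standard deviations count as anomalous

    def observe(self, value: float) -> bool:
        anomalous = False
        if len(self.history) >= 2:
            mu, sigma = mean(self.history), stdev(self.history)
            anomalous = sigma > 0 and abs(value - mu) / sigma > self.threshold
        if not anomalous:
            self.history.append(value)  # keep anomalies out of the baseline
        return anomalous


detector = RollingZScoreDetector(window=30, threshold=3.0)
baseline = [0.20, 0.21, 0.19, 0.20, 0.22, 0.18, 0.20, 0.21, 0.19, 0.20]
print([detector.observe(v) for v in baseline].count(True))  # 0
print(detector.observe(0.05))  # True: high-priority share suddenly collapsed
```

Keeping flagged values out of the baseline stops a slow, sustained attack from quietly becoming the new "normal".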

Scenario 10: An AI summarization system for legal contracts omits key clauses in certain jurisdictions due to training bias.

Your Response:

A) Deploy fairness testing and retrain the model with balanced datasets.

B) Accept minor errors as AI learning limitations.

C) Stop using AI in legal contexts entirely.

Correct Answer: A

Explanation:

This is Bias in AI Decision-Making. Fairness tools like IBM’s AI Fairness 360 (AIF360) and PointGuard AI’s bias detection modules can detect and mitigate such imbalances without scrapping the system.
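A basic fairness test for this scenario compares how often the summarizer keeps key clauses in each jurisdiction. The sketch below assumes a hand-labeled test set of (jurisdiction, clause present, clause kept in summary) records and flags jurisdictions whose recall trails the best-served one by more than a chosen gap; the data and the 0.10 tolerance are made up for illustration, and libraries such as AIF360 offer far richer metrics.

```python
from collections import defaultdict


def recall_by_group(records):
    """records: (jurisdiction, clause_present, clause_in_summary) tuples from a labeled test set."""
    kept, total = defaultdict(int), defaultdict(int)
    for group, present, in_summary in records:
        if present:  # recall only counts clauses that actually exist in the contract
            total[group] += 1
            kept[group] += int(in_summary)
    return {g: kept[g] / total[g] for g in total}


def flag_disparities(recalls, max_gap=0.10):
    """Jurisdictions whose recall trails the best-served jurisdiction by more than max_gap."""
    best = max(recalls.values())
    return sorted(g for g, r in recalls.items() if best - r > max_gap)


# Made-up evaluation data for illustration only.
records = ([("UAE", True, True)] * 9 + [("UAE", True, False)]
           + [("KSA", True, True)] * 6 + [("KSA", True, False)] * 4
           + [("UK", True, True)] * 19 + [("UK", True, False)] + [("UK", False, False)] * 5)
recalls = recall_by_group(records)
print(recalls)                    # {'UAE': 0.9, 'KSA': 0.6, 'UK': 0.95}
print(flag_disparities(recalls))  # ['KSA']
```

Flagged jurisdictions tell you where to add balanced training data before retraining, rather than abandoning AI in legal work altogether.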

8–10 Correct: AI Security Master

You understand threats, defenses, and governance. You’re ready to integrate AI safely — and PointGuard AI can automate most of the heavy lifting for you.

5–7 Correct: AI Security Aware

You’re on the right track, but there are gaps in your understanding. Consider a PointGuard AI Security Assessment to identify weak points.

0–4 Correct: AI Security Risk Zone

You’re vulnerable. Start by revisiting Days 1–9 in this series and deploy foundational AI governance tools immediately.

Book a Free AI Risk Assessment from FSD-Tech to see how your real systems would perform under these scenarios.

This quiz is an interactive set of 10 real-world AI attack scenarios where you choose the best response. You’ll see instant feedback, correct answers, and explanations to help you understand modern AI security risks.

AI attacks are no longer just a tech-team problem; they’re a business risk. This quiz helps you quickly see whether you can identify threats before they damage your company, your customers, or your reputation.

It’s designed for business leaders, security teams, compliance officers, and anyone who uses or manages AI systems — whether you’re in BFSI, energy, healthcare, manufacturing, or government.

Traditional security quizzes focus on phishing or password safety. This quiz focuses on AI-specific attacks like prompt injection, deepfakes, model poisoning, and supply chain compromises — threats most people aren’t trained to spot.

You’ll learn how hackers can target your AI systems, how to respond effectively, and how tools like PointGuard AI can prevent these attacks in real time.

No technical background is needed. The scenarios are written in plain language, and the explanations are simple enough for non-technical decision-makers to understand.

The quiz takes around 10–15 minutes, and you’ll walk away with a clearer understanding of AI risks than most boardroom presentations can give you.

It helps identify gaps in your team’s AI risk awareness. If you or your colleagues miss certain scenarios, that’s a clear sign of where you need training or policy updates.

You’ll know your AI Security IQ score — whether you’re an AI Security Master, AI Security Aware, or in the AI Security Risk Zone — and exactly what steps to take next.

A low score isn’t a failure; it’s a signal. You can use your results to start training, deploy the right AI governance tools, and request a free AI Risk Assessment from PointGuard AI.

Every scenario is based on real incidents from 2023–2025 across industries, mapped to recognized security frameworks like ISO 42001, NIST AI RMF, and OWASP LLM Top 10.

Yes, you can share it. In fact, we encourage you to post it on LinkedIn with #FSD-Tech-PointGuardAIChallenge and tag your colleagues so they can take the quiz too.

The quiz isn’t a complete solution, but it’s a starting point: it raises awareness, sparks discussion, and shows where AI governance tools like PointGuard AI can fill the gaps.

The quiz isn’t only for large enterprises. Small and medium businesses are also at risk, especially if they use AI tools without formal approval (Shadow AI), so it can be an eye-opener for teams of any size.

Share your results internally, review missed answers with your security team and consider an AI security readiness audit with FSD-Tech and PointGuard AI to test your real systems.

Mohd Elayyan is an entrepreneur, cybersecurity expert, and AI governance leader bringing next-gen innovations to the Middle East and Africa. With expertise in AI Security, Governance, and Automated Offensive Security, he helps organizations stay ethical, compliant, and ahead of threats.
