What Exactly Is AI Governance?

In the simplest terms:

AI governance is the set of rules, processes, and oversight mechanisms that guide how AI is designed, built, deployed, and monitored.

Think of it as traffic laws for AI systems: it defines who can drive, how fast, and what happens when rules are broken.

Why it matters in the GCC & UAE

- AI adoption is exploding in sectors like BFSI, oil & gas, logistics, and smart cities.

- National strategies like the UAE AI Strategy 2031 and Saudi Arabia’s National Strategy for Data & AI (NSDAI) are making governance a compliance priority.

- The risks are multi-dimensional — bias in decision-making, data breaches, and non-compliance fines that can run into millions.

Globally, guidance comes from frameworks like:

- EU AI Act – Strict classification and risk-tier governance.

- NIST AI Risk Management Framework (AI RMF) – A voluntary US framework for AI risk assessment and control.

- ISO/IEC 42001:2023 – The first international AI management system standard.

These frameworks provide structured blueprints for governance.

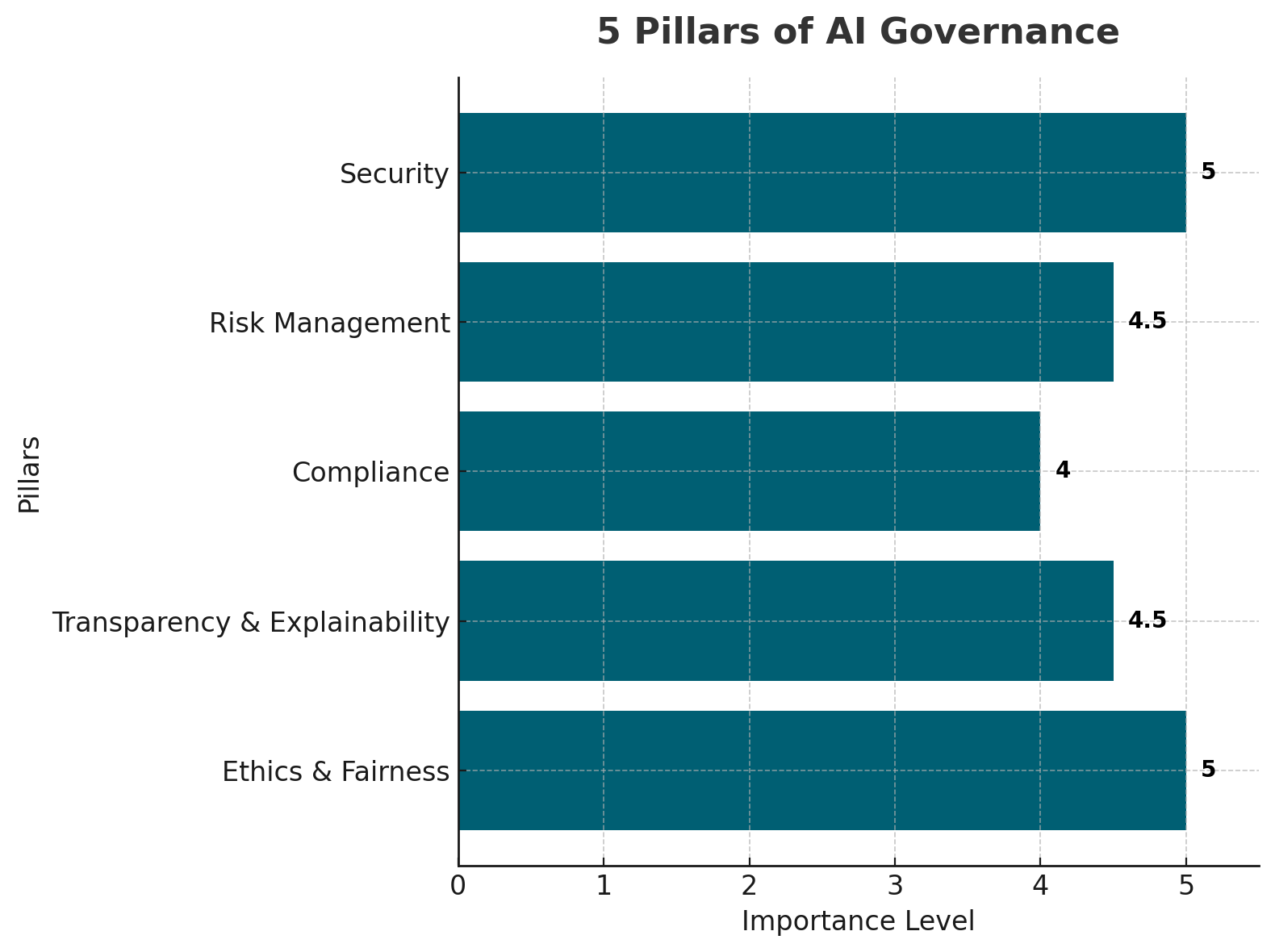

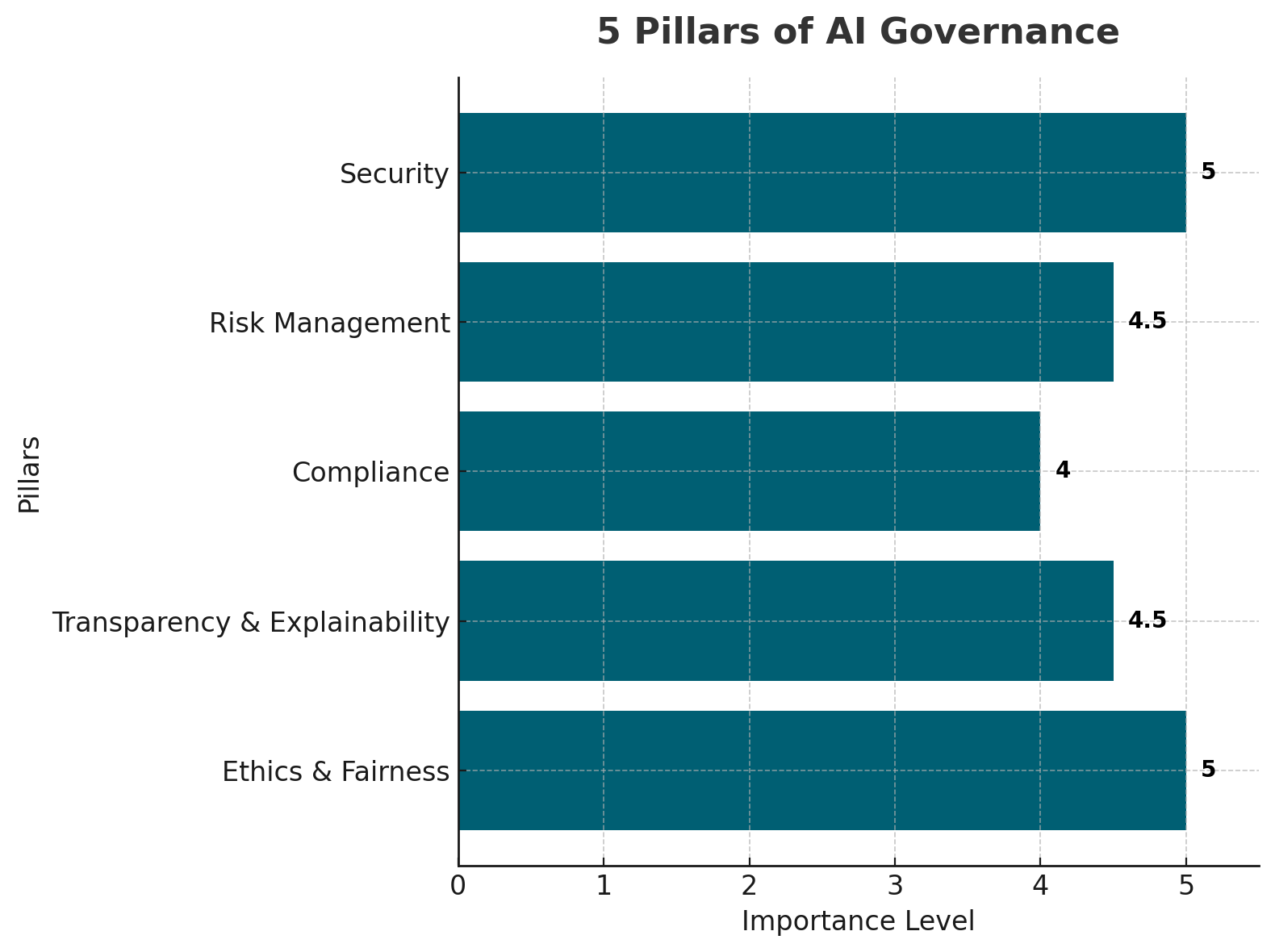

The 5 Core Pillars of AI Governance

These pillars form the foundation of an effective AI governance program:

1. Ethics & Fairness

- Avoid bias in AI-driven decision-making.

- Use fairness-testing tools like IBM AI Fairness 360 or Microsoft’s Fairlearn to identify and mitigate discriminatory patterns.

- Example: A UAE bank’s AI-driven loan approval system must not unintentionally favor or reject applicants based on nationality, gender, or location.
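As a rough illustration of what a fairness test measures, the sketch below computes the demographic parity difference, meaning the gap between the highest and lowest approval rates across applicant groups. It uses only the Python standard library. The sample decisions, the group labels, and the 0.2 review threshold are illustrative assumptions, not values taken from any regulator or from the tools named above, which offer fuller implementations of this and related metrics.

```python
from collections import defaultdict

def approval_rates(decisions, groups):
    """Return the share of approved applications per group.

    decisions: iterable of 0/1 values (1 = approved)
    groups: iterable of group labels, same length as decisions
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        approved[group] += decision
    return {g: approved[g] / totals[g] for g in totals}

def demographic_parity_difference(decisions, groups):
    """Gap between the highest and lowest group approval rates."""
    rates = approval_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())

# Hypothetical loan decisions for two applicant groups.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

gap = demographic_parity_difference(decisions, groups)
REVIEW_THRESHOLD = 0.2  # assumed internal policy value, not a legal limit
needs_review = gap > REVIEW_THRESHOLD
```

A large gap does not prove discrimination on its own, but it is a reasonable trigger for a closer review of the model and its training data.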

2. Transparency & Explainability

- Ensure AI decisions can be explained in human terms.

- Critical in sectors like healthcare, where life-or-death decisions may be influenced by AI outputs.

- Example: An AI diagnostic tool in a Dubai hospital must explain why it recommended a specific treatment plan.
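One simple way to picture an explanation "in human terms" is to rank the inputs by how much each one moved the result. The sketch below does this for a plain linear score, where each feature's contribution is its weight times its value. The feature names, weights, and input values are hypothetical, and real diagnostic models are rarely this simple, so treat it as an illustration of the idea rather than a recommended method.

```python
def explain_linear_score(weights, features, top_n=3):
    """Rank features by the size of their contribution to a linear score.

    Returns the total score and the top_n (feature, contribution) pairs.
    """
    contributions = {name: weights[name] * value for name, value in features.items()}
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    score = sum(contributions.values())
    return score, ranked[:top_n]

# Hypothetical weights and inputs, for illustration only.
weights = {"age": 0.02, "blood_pressure": 0.01, "smoker": 0.5}
patient = {"age": 50, "blood_pressure": 140, "smoker": 1}

score, top = explain_linear_score(weights, patient, top_n=2)
top_feature_names = [name for name, _ in top]
```

Here the two largest contributors are returned alongside the score, which is the kind of summary a reviewer could read without inspecting the model itself.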

3. Compliance

- Meet legal and regulatory requirements such as the UAE Data Protection Law, GDPR, and the EU AI Act.

- Ensure alignment with ISO/IEC 42001 and the NIST AI RMF.

- Example: GCC telecom companies must ensure AI-powered customer analytics comply with cross-border data transfer laws.

4. Risk Management

- Identify, assess, and mitigate risks before deployment.

- Maintain a live AI risk register that tracks vulnerabilities like prompt injection or supply chain poisoning.
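A live risk register can start as a small structured record per risk. The following is a minimal sketch under stated assumptions: the field names, the 1 to 5 likelihood and impact scales, and the likelihood times impact score are common conventions chosen for illustration, and the two sample entries are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import date

@dataclass
class RiskEntry:
    risk_id: str
    asset: str
    description: str
    likelihood: int  # 1 (rare) to 5 (almost certain), assumed scale
    impact: int      # 1 (minor) to 5 (severe), assumed scale
    owner: str
    status: str = "open"
    logged_on: date = field(default_factory=date.today)

    @property
    def score(self) -> int:
        # Simple likelihood x impact scoring.
        return self.likelihood * self.impact

class RiskRegister:
    def __init__(self):
        self._entries = {}

    def add(self, entry: RiskEntry) -> None:
        self._entries[entry.risk_id] = entry

    def close(self, risk_id: str) -> None:
        self._entries[risk_id].status = "mitigated"

    def open_risks(self):
        """Return open risks, highest score first."""
        open_entries = [e for e in self._entries.values() if e.status == "open"]
        return sorted(open_entries, key=lambda e: e.score, reverse=True)

register = RiskRegister()
register.add(RiskEntry("R-001", "support-chatbot", "Prompt injection via user input", 4, 4, "security-team"))
register.add(RiskEntry("R-002", "credit-model", "Poisoned third-party training data", 2, 5, "ai-owner"))
register.close("R-002")
top_open = register.open_risks()
```

Keeping an owner on every entry ties the register back to accountability: each open risk has someone responsible for closing it.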

5. Security

- Protect models against manipulation, theft, or unsafe outputs.

- Implement runtime monitoring to detect anomalies in AI behavior.

- Example: A chatbot in a KSA e-commerce platform should not be tricked into leaking sensitive customer data.
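One piece of runtime monitoring is checking a chatbot's response before it reaches the user. The sketch below scans outgoing text for patterns that look like sensitive data and swaps in a fallback message when it finds any. The two regular expressions and the fallback wording are assumptions for illustration; a production filter would need broader, tested rules and would sit alongside other defenses.

```python
import re

# Assumed patterns for illustration; real deployments need broader, tested rules.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "card_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}

def scan_response(text):
    """Return the names of sensitive patterns found in a chatbot response."""
    return [name for name, pattern in SENSITIVE_PATTERNS.items() if pattern.search(text)]

def guard_response(text, fallback="Sorry, I can't share that information."):
    """Pass the response through unless it matches a sensitive pattern."""
    findings = scan_response(text)
    if findings:
        return fallback, findings
    return text, findings

safe, safe_findings = guard_response("Your order ships tomorrow.")
blocked, findings = guard_response("The customer's email is jane@example.com")
```

Logging the `findings` list for every blocked response also gives the security team a record of attempted data leaks to investigate.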

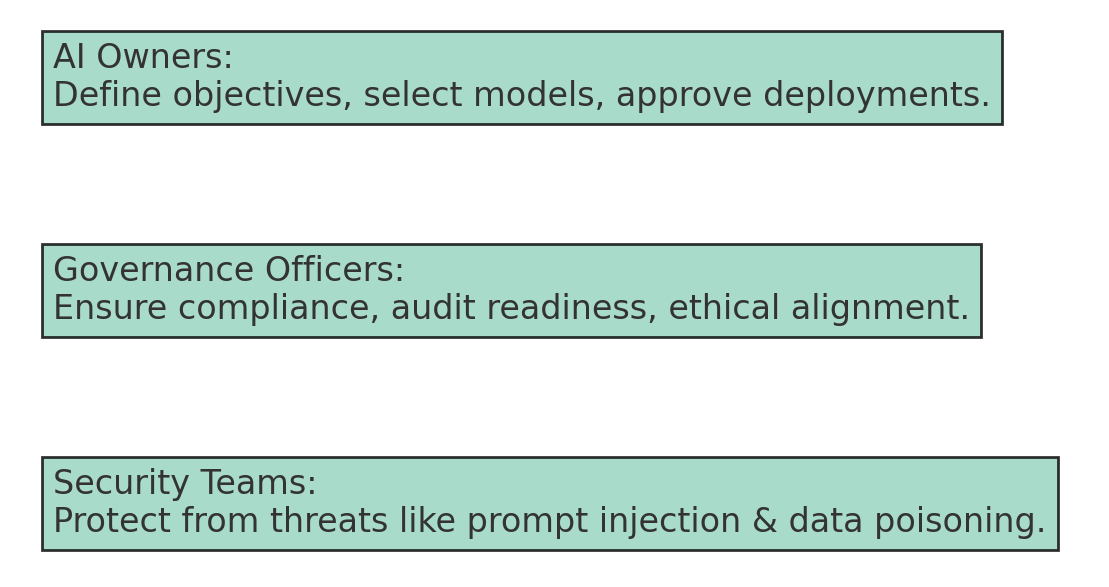

The Three Key Roles in AI Governance

Clear accountability is the difference between proactive prevention and reactive damage control.

AI Owners

- Define project objectives, select models, and approve deployments.

- Ensure AI aligns with both business goals and compliance requirements.

Governance Officers

- Oversee ethical compliance, audit readiness, and regulatory alignment.

- Maintain documentation and liaise with regulators.

Security Teams

- Protect against technical threats like prompt injection, model evasion, and data poisoning.

- Deploy runtime defenses and monitor model behavior in real time.


Why Clear Responsibilities Matter

Without defined ownership, AI governance becomes a blame game:

- Who’s responsible if a chatbot leaks sensitive information?

- Who approves updates after retraining?

- Who ensures ongoing compliance with ISO 42001?

In mature governance models, every AI asset has:

- A named owner

- Governance responsibilities written into job descriptions

- Audit trails for every deployment and update
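The three properties above can be captured in a small asset record. This is a minimal sketch, assuming hypothetical class and field names: it rejects an asset with no named owner and appends an audit event each time a new version is deployed.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

@dataclass
class AuditEvent:
    timestamp: datetime
    actor: str
    action: str
    detail: str

@dataclass
class AIAsset:
    name: str
    owner: str  # a named person or role, never blank
    version: str
    audit_trail: list = field(default_factory=list)

    def __post_init__(self):
        if not self.owner.strip():
            raise ValueError("every AI asset needs a named owner")

    def record(self, actor, action, detail=""):
        """Append an audit event; earlier events are left untouched."""
        event = AuditEvent(datetime.now(timezone.utc), actor, action, detail)
        self.audit_trail.append(event)

    def deploy(self, new_version, approved_by):
        """Update the version and log who approved the deployment."""
        self.version = new_version
        self.record(approved_by, "deploy", f"version {new_version}")

# Hypothetical asset and approver names.
asset = AIAsset(name="loan-scoring", owner="head-of-credit-risk", version="1.0")
asset.deploy("1.1", approved_by="head-of-credit-risk")
```

With a record like this, the question "who approves updates after retraining?" has an answer stored next to the model rather than in someone's memory.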

How PointGuard AI Simplifies AI Governance

FSD-Tech, in partnership with PointGuard AI, offers an end-to-end AI governance and security solution tailored for GCC & UAE enterprises:

Automated Asset Discovery

- Detect all AI models, datasets, and pipelines in use — including shadow AI.

Compliance Dashboards

- Map AI assets to ISO 42001, NIST AI RMF, and OWASP LLM Top 10.

Policy Enforcement

- Block deployments that fail governance checks.

- Example: Prevent a new predictive model in a refinery from going live if it hasn’t passed security validation.
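The general idea of a deployment gate can be sketched in a few lines. The example below is not PointGuard AI's API; the check names and the function are hypothetical, and it only illustrates the logic of refusing a release when a required check is missing or has failed.

```python
# Assumed policy: every deployment must pass these checks.
REQUIRED_CHECKS = ("security_validation", "bias_test", "owner_approval")

def evaluate_deployment(check_results, required=REQUIRED_CHECKS):
    """Return (allowed, missing), where missing lists checks that did not pass.

    check_results: dict mapping check name to True/False.
    A check that is absent from the dict counts as not passed.
    """
    missing = [name for name in required if not check_results.get(name, False)]
    return (not missing, missing)

# Hypothetical refinery model that skipped security validation.
refinery_model = {"bias_test": True, "owner_approval": True}
allowed, missing = evaluate_deployment(refinery_model)
```

Treating an absent check as a failure is a deliberate choice here: a model should be blocked by default until evidence of each check exists.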

Making AI Governance Work in GCC & UAE

Adopting AI governance early is not just about compliance — it’s a strategic advantage.

Benefits include:

- Risk reduction — Prevent AI disasters before they occur.

- Trust building — Show customers and partners you’re responsible with data and AI.

- Regulatory alignment — Stay ahead of tightening AI compliance laws.

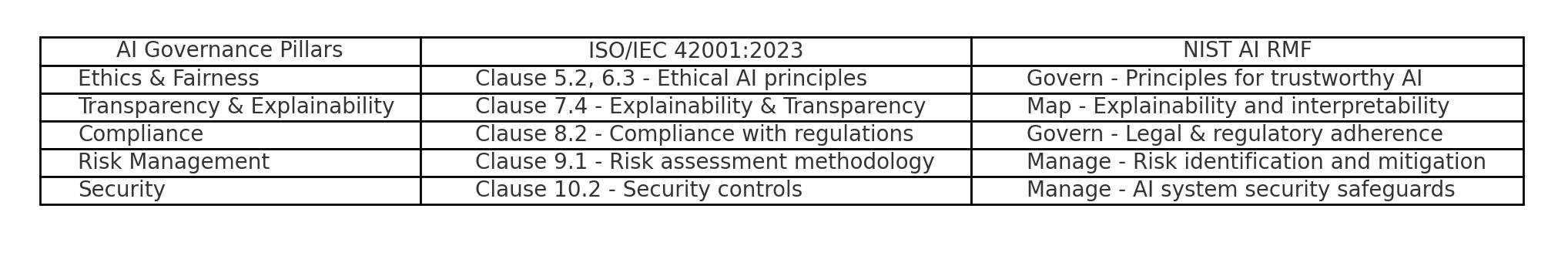

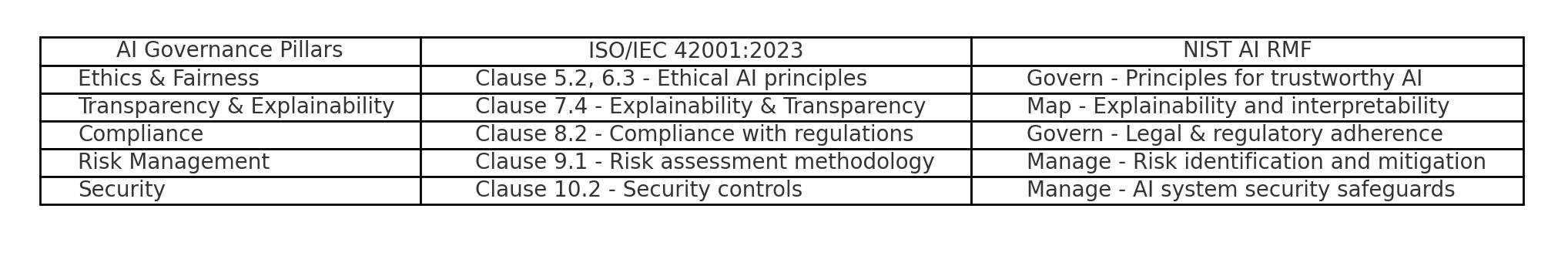

ISO/IEC 42001:2023 & NIST AI RMF Mapping to AI Governance Pillars

FAQ

Q1: What is AI governance in simple terms?

AI governance is the comprehensive framework of rules, processes, and oversight mechanisms that ensure artificial intelligence systems are ethical, compliant, transparent, and secure from the moment they are conceived to the day they are retired. This includes how AI is designed, trained, deployed, monitored, and eventually decommissioned.

It goes beyond just technical controls — it ensures that AI aligns with legal requirements, ethical values, and organizational goals, reducing risks such as bias, security breaches, and compliance failures.

Q2: Why is AI governance important in GCC and UAE?

In the GCC and UAE, AI adoption is accelerating rapidly in high-value sectors such as banking, oil & gas, healthcare, logistics, and government services. While this creates opportunities for innovation and efficiency, it also brings increased exposure to risks:

- Bias in AI decisions that can impact customer fairness.

- Non-compliance with emerging regulations and frameworks such as the UAE Data Protection Law or the Saudi NSDAI.

- AI-powered cyber threats, including prompt injection attacks and model manipulation.

AI governance ensures that these risks are proactively managed, keeping AI trustworthy, explainable, and compliant.

Q3: What are the five pillars of AI governance?

The five core pillars of AI governance, which map to global frameworks like ISO/IEC 42001 and the NIST AI RMF, are:

- Ethics & Fairness – Avoiding bias and ensuring equitable decision-making.

- Transparency & Explainability – Making AI decisions understandable to humans.

- Compliance – Meeting local and international legal requirements.

- Risk Management – Identifying and prioritizing AI risks early.

- Security – Preventing AI models from being manipulated, stolen, or producing unsafe outputs.

Q4: How does AI governance relate to ISO 42001 and NIST AI RMF?

- ISO/IEC 42001:2023 provides a globally recognized standard for AI management systems, outlining requirements for risk management, ethical alignment, and governance processes.

- NIST AI Risk Management Framework (AI RMF) offers a flexible, principles-based approach to identifying, managing, and mitigating AI-related risks.

AI governance programs in GCC/UAE can use these frameworks as benchmarks for compliance and operational excellence.

Q5: Who is responsible for AI governance in an organization?

AI governance is multi-stakeholder:

- AI Owners – Define business objectives, select AI models, approve deployments.

- Governance Officers – Ensure compliance, audit readiness, and ethical standards are met.

- Security Teams – Protect AI systems from technical threats such as data poisoning, prompt injection, and model theft.

Q6: What happens if AI governance is ignored?

Without governance, organizations face:

- Financial Loss – Regulatory fines, operational disruptions, and fraud losses.

- Reputational Damage – Public backlash from biased or unethical AI decisions.

- Legal Penalties – Non-compliance with AI-specific laws in GCC and international markets.

Q7: How does AI governance prevent AI disasters?

By implementing:

- Pre-deployment testing (red teaming, bias detection).

- Continuous runtime monitoring to detect anomalies and threats.

- Strict policy enforcement to ensure AI only operates within safe and ethical boundaries.

Q8: What is shadow AI, and why is it risky?

Shadow AI refers to AI tools or models deployed without formal governance approval. Risks include:

- Compliance violations (GDPR, UAE Data Protection Law, ISO standards).

- Security gaps that hackers can exploit.

- Unvetted models introducing bias or unsafe behavior.

Q9: How does PointGuard AI help with governance?

PointGuard AI supports governance by:

- Automating asset discovery – Mapping all AI models, datasets, and APIs in use.

- Compliance dashboards – Aligning AI assets to ISO 42001, NIST AI RMF, and local GCC standards.

- Policy enforcement – Blocking deployments that violate governance rules.

Q10: What is the role of AI Owners in governance?

AI Owners are accountable for:

- Defining goals for AI use cases.

- Selecting appropriate models and datasets.

- Approving deployment only after governance and security checks.

Q11: How can AI governance improve trust?

Transparent, ethical AI practices build trust among:

- Customers – Confident that decisions are fair.

- Partners – Knowing compliance is maintained.

- Regulators – Seeing clear audit trails and compliance reports.

Q12: Can AI governance reduce compliance costs?

Yes — proactive governance:

- Streamlines audits by maintaining complete documentation.

- Reduces penalties by preventing violations before they occur.

- Minimizes downtime caused by AI-related security breaches.

Q13: How do GCC-specific laws impact AI governance?

- UAE Data Protection Law – Requires data transparency and accountability.

- Saudi NSDAI AI Policy – Demands AI systems be ethical, secure, and explainable.

- Cross-border compliance – For multinational GCC organizations, governance ensures AI aligns with the EU AI Act and other international standards.

Q14: Is AI governance only for large organizations?

No — small and medium enterprises (SMEs) also need AI governance to:

- Manage operational risks.

- Meet compliance requirements.

- Avoid reputational harm from unintended AI outcomes.

Q15: What’s the future of AI governance in GCC & UAE?

Expect:

- Stricter regulations with mandatory AI audits.

- Greater alignment with international AI standards like ISO/IEC 42001 and NIST AI RMF.

- Wider adoption of automated governance tools like PointGuard AI to scale compliance without slowing innovation.
